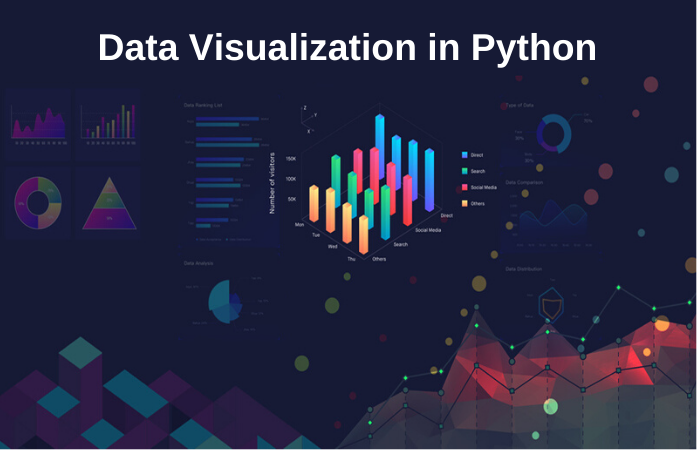

Exploring Python Visualizers for Data Visualization

In today’s data-driven world, visualizing information effectively is crucial for extracting insights. Python visualizers, including libraries and frameworks, empower data scientists and analysts to create insightful graphical representations of complex datasets. This article delves into the key aspects of Python visualization, exploring its tools, methodologies, and applications to enhance data interpretation.

Introduction to Data Visualization

Data visualization is the graphical representation of information and data. By using visual elements like charts, graphs, and maps, data visualization tools provide an accessible way to see and understand trends, outliers, and patterns in data. As datasets grow larger and more complex, this ability has become crucial for decision-making and strategy development across industries.

The significance of data visualization lies in its ability to convert large and complex datasets into a visual context, thereby simplifying the comprehension of hard-to-grasp data. This enhances the user’s ability to detect correlations, trends, and patterns that might not be evident in raw data. For businesses and researchers alike, it facilitates the identification of areas that require attention or improvement, substantiates the factual basis of decisions, and helps in predicting future trends.

Types of data visualizations widely employed in industries range from simple bar, line, and pie charts for basic data representation, to more complex heat maps, bubble charts, and tree maps for multidimensional data. For temporal data, time series plots and sequence charts are commonly used, whereas geographical data are best represented through cartograms and geospatial maps. These visual forms enable analysts and stakeholders to digest large volumes of information at a glance and make informed decisions swiftly.

Python, a versatile programming language, stands at the forefront of meeting these data visualization needs. It is favored in the field for its readability, its simplicity, and its powerful libraries for data analysis and visual representation, including Matplotlib, Seaborn, Plotly, and Bokeh, among others. With these libraries, a few lines of code are often enough to produce anything from a statistical graph to an interactive plot that can be used in a web application. Additionally, the strong, active community around Python offers extensive resources, including tutorials, forums, and pre-built code snippets, which considerably shorten the learning curve for data visualization tasks. All these attributes make Python a valuable tool for data scientists and analysts aiming to unlock the full potential of their data through effective visual representation.

Why Use Python for Visualization

Having introduced the significance and types of data visualization, we can now look at why Python has become so widely adopted for this purpose. Python’s simplicity, coupled with its extensive range of libraries and strong community support, makes it a sound choice for both beginners and advanced programmers looking to convert complex datasets into insightful visual representations.

One of the most compelling benefits of using Python for data visualization is its simplicity. Python’s syntax is clear and intuitive, making it accessible for users with varying levels of programming expertise. This simplicity ensures that analysts and data scientists can focus more on analyzing data and less on wrestling with the complexities of programming.

Furthermore, Python boasts a wide array of visualization libraries such as Matplotlib, Seaborn, Plotly, and Bokeh, each with its strengths and specialized use cases. These libraries offer a versatile toolkit for creating static, animated, and interactive visualizations, which can cater to a broad spectrum of data visualization needs. From simple histograms to complex 3D models, Python’s visualization libraries enable users to present data in the most effective way possible.

The vibrancy and support of the Python community is another significant advantage. Tutorials, forums, and documentation are abundant, which flattens the learning curve and speeds up problem-solving. This active community constantly contributes to enhancing library capabilities and introducing new technologies, helping Python remain at the forefront of data visualization innovation.

Python has excelled in numerous use cases across various fields, including academia, finance, marketing, and technology. For instance, in finance, Python is used extensively to visualize stock market trends, while in marketing, it helps in analyzing customer behavior patterns. The flexibility in visual representation that Python offers enhances decision-making and strategy development across these diverse sectors.

In sum, the simplicity, the comprehensive range of visualization libraries, and robust community support render Python an unparalleled tool in the realm of data visualization. As we move into exploring key Python libraries for data visualization in the following chapter, it’s evident that Python’s ecosystem is uniquely equipped to address the diverse needs and challenges of visualizing complex datasets effectively.

Popular Python Visualization Libraries

Building upon the foundational understanding of Python’s significance in data visualization, it’s crucial to delve into the specific libraries that make Python a powerhouse for data interpretation and representation. Among the plethora of available tools, Matplotlib, Seaborn, Plotly, and Bokeh stand out for their unique capabilities, ease of use, and flexibility in catering to diverse visualization needs.

Matplotlib is often recognized as the grandfather of Python visualization libraries, offering a solid foundation for creating static, animated, and interactive visualizations in Python. Its strength lies in its comprehensive array of basic plotting functions, such as line charts, scatter plots, and histograms. This versatility makes Matplotlib a go-to library for users looking to grasp the basics of data visualization or for cases requiring highly customizable plots. Simple plots take only a few lines, while fine-grained customization has a steeper learning curve; in return, the control Matplotlib offers over every aspect of a figure makes it an invaluable tool for researchers and analysts aiming for precision in their plots.

Seaborn builds on Matplotlib’s robust foundation to offer a more advanced interface for generating complex visualizations with ease. It stands out for its ability to handle statistical plotting effortlessly, providing a high-level interface for drawing attractive and informative statistical graphics. Seaborn is especially effective for data exploration and understanding data relationships, making use of internally calculated statistics to produce enhanced visual representations. This library is ideal for users seeking to create more sophisticated plots with less code compared to Matplotlib.

Plotly, unlike the previously mentioned libraries, excels in creating interactive, web-based visualizations. Its strength lies in its ability to produce highly interactive plots that can be embedded in web apps or Jupyter notebooks, facilitating a dynamic data exploration experience. Plotly’s syntax is intuitive, and it supports a wide range of charts, including 3D charts and maps, which are not as easily achieved with Matplotlib or Seaborn. This makes Plotly particularly useful for projects aimed at a broader audience, requiring interactive elements to engage users and convey complex datasets effectively.

Bokeh, similar to Plotly, emphasizes interactive plotting and streaming data, suitable for creating highly interactive visualizations and dashboards that can be deployed to web browsers. What sets Bokeh apart is its ability to handle large and streaming datasets, making it an excellent choice for real-time data applications. It offers a simple syntax, which, when combined with its powerful outputs, makes it an attractive option for both beginners and experienced developers aiming to build sophisticated interactive visualizations.

Choosing between these libraries depends largely on the specific requirements of the project, such as the complexity of the data, the need for interactivity, and the intended audience. For static, highly customizable plots, Matplotlib and Seaborn are exceptional choices. For web applications and projects requiring dynamic, interactive visualizations, Plotly and Bokeh offer compelling features that can significantly enhance data insights and user engagement. Transitioning into the next chapter, we will dive deeper into how to get started with Matplotlib, exploring its capabilities through practical examples to firmly ground readers in utilizing one of Python’s core visualization tools.

Getting Started with Matplotlib

Matplotlib, as outlined in the previous chapter, stands as a cornerstone in the landscape of Python visualization libraries, favored for its versatility and efficiency in creating a wide array of plots and graphs. Delving into Matplotlib’s functionality, this chapter provides a practical guide to employing its capabilities in crafting basic data visualizations. Emphasis is placed on line plots, scatter plots, and bar charts, which serve as fundamental tools in the data analyst’s arsenal for drawing meaningful insights from data.

**Getting Started with Line Plots**: Line plots are quintessential for visualizing data trends over a period. To create a line plot in Matplotlib, one begins by importing the library with `import matplotlib.pyplot as plt`. Following this, the plotting process involves specifying the data points. For instance, plotting stock prices against time would require passing the time values for the x-axis and the prices for the y-axis. The command `plt.plot(time, prices)` draws the line, and `plt.show()` then displays the figure.
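The line plot described above can be sketched in a few lines. This is a minimal illustration rather than a recommended template: the `time` and `prices` values are made-up sample data, and the `plt.show()` call is left commented out so the script also runs in environments without a display.

```python
import matplotlib.pyplot as plt

# Hypothetical sample data: five trading days and invented closing prices.
time = [1, 2, 3, 4, 5]
prices = [101.2, 102.8, 101.9, 104.5, 106.1]

plt.figure()
# plt.plot returns a list of Line2D objects, one per plotted series.
lines = plt.plot(time, prices)

# plt.show()  # uncomment to open the figure in an interactive session
```

The first argument supplies the x-axis values and the second the y-axis values, matching the order used in `plt.plot(time, prices)`.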

**Exploring Scatter Plots**: Scatter plots are pivotal for observing the relationship between two variables. The simplicity of crafting a scatter plot using Matplotlib further underscores its utility. Invoking `plt.scatter(x, y)` creates a plot where each point represents an observation with coordinates (x, y). This form of visualization is particularly effective in identifying correlations, outliers, or clusters within the data set.
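A scatter plot follows the same pattern. The sketch below assumes a small invented dataset (hours studied against exam score) in which the last observation is a deliberate outlier.

```python
import matplotlib.pyplot as plt

# Hypothetical observations: hours studied (x) and exam score (y).
x = [1, 2, 3, 4, 5, 6]
y = [52, 58, 61, 70, 74, 95]

plt.figure()
# Each (x[i], y[i]) pair becomes one marker on the plot.
points = plt.scatter(x, y)

# plt.show()  # uncomment to open the figure in an interactive session
```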

**Delving into Bar Charts**: Bar charts offer a visual comparison among different entities or categories. In Matplotlib, they are generated with `plt.bar()`, which takes the bars’ positions (or category names) as its first argument and their heights as its second. Labeling each bar with the category it represents keeps the chart easy to read.
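As a rough sketch of the bar chart described above, the example below places four bars at integer positions and labels them through the `tick_label` argument of `plt.bar()`. The region names and sales figures are invented for illustration.

```python
import matplotlib.pyplot as plt

# Hypothetical categories and values.
categories = ["North", "South", "East", "West"]
sales = [120, 95, 140, 80]
positions = list(range(len(categories)))

plt.figure()
# The first argument gives bar positions, the second their heights;
# tick_label attaches a category name to each bar.
bars = plt.bar(positions, sales, tick_label=categories)

# plt.show()  # uncomment to open the figure in an interactive session
```

Passing the category names directly as the first argument, as in `plt.bar(categories, sales)`, is a common shorthand that produces the same labeled bars.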

The process of creating these visualizations underscores the simplicity and power of Matplotlib. Each function provided by Matplotlib, from `plot` to `scatter`, and `bar`, is designed with a focus on ease of use while maintaining the flexibility for customization, such as adding titles, labels, and legends through functions like `plt.title()`, `plt.xlabel()`, and `plt.ylabel()`. These embellishments are not mere aesthetic upgrades but serve to encapsulate complex data insights into an accessible format, thus bridging the gap between data analysis and data interpretation.
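The customization functions mentioned above can be combined as follows. This is a minimal sketch with invented revenue and cost figures; the `label` arguments are what `plt.legend()` uses to build the legend entries.

```python
import matplotlib.pyplot as plt

# Hypothetical monthly figures, in thousands of dollars.
months = [1, 2, 3, 4]
revenue = [20, 24, 23, 30]
costs = [15, 16, 18, 19]

plt.figure()
plt.plot(months, revenue, label="Revenue")
plt.plot(months, costs, label="Costs")

# Title, axis labels, and legend give the viewer the context for the lines.
plt.title("Revenue vs. costs")
plt.xlabel("Month")
plt.ylabel("Amount (thousand USD)")
legend = plt.legend()

ax = plt.gca()  # the Axes object that the calls above drew on
# plt.show()  # uncomment to open the figure in an interactive session
```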

In transitioning from the foundational visualizations crafted with Matplotlib to the exploratory and statistical graphics enabled by Seaborn, the subsequent chapter aims to elevate our visualization capabilities. Through Seaborn’s abstraction over Matplotlib, it promises a streamlined approach to more intricate visual compositions, such as heatmaps and violin plots, which are invaluable for extracting nuanced insights from complex datasets.

Advanced Visualizations with Seaborn

Having covered the foundational concepts and practical applications of Matplotlib in the preceding chapter, we now turn our attention to Seaborn, a library that extends Matplotlib’s capabilities for statistical data visualization. Seaborn differentiates itself by abstracting away much of the complexity involved in creating sophisticated visualizations, allowing analysts and scientists to delve deeper into their data with less code.

One of the key benefits of using Seaborn is its ability to handle Pandas data structures efficiently, integrating seamlessly with the data manipulation tools that many data professionals have come to rely on. This synergy between data manipulation and visualization facilitates a more streamlined workflow, enabling insights to be drawn more directly from raw data.

Seaborn shines particularly when it comes to creating complex visualizations such as heatmaps and violin plots. A heatmap in Seaborn can be generated with a single function call, yet it allows for a rich layering of information, portraying the magnitude of phenomena through variations in color. This type of visualization is invaluable for spotting patterns and correlations in datasets, offering a quick, intuitive grasp of the data’s structure and peculiarities.
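To make the single-call heatmap concrete, here is a small sketch that plots the correlation matrix of a Pandas DataFrame. The column names and the random data are assumptions chosen purely for illustration; any numeric DataFrame would work the same way.

```python
import matplotlib.pyplot as plt
import numpy as np
import pandas as pd
import seaborn as sns

# Hypothetical dataset: 50 rows of random values in four made-up columns.
rng = np.random.default_rng(seed=0)
df = pd.DataFrame(rng.normal(size=(50, 4)), columns=["a", "b", "c", "d"])

# Pairwise correlations between columns, as a 4x4 DataFrame.
corr = df.corr()

plt.figure()
# One call draws the colored grid; annot=True prints each value in its cell,
# and vmin/vmax pin the color scale to the full correlation range.
ax = sns.heatmap(corr, annot=True, cmap="coolwarm", vmin=-1, vmax=1)

# plt.show()  # uncomment to open the figure in an interactive session
```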

Violin plots, on the other hand, represent another advanced visualization that Seaborn simplifies. These plots provide a deeper understanding of the distribution of data, merging aspects of the box plot with kernel density estimation to show peaks in data distribution. This is particularly useful when comparing distributions between different categories or groups, allowing for nuanced interpretations of the data that go beyond what is possible with simpler plots.
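A violin plot comparing two groups might look like the sketch below. The `group` and `value` column names and the randomly generated samples are hypothetical; the point is only to show how a long-format DataFrame maps onto the `x` and `y` arguments.

```python
import matplotlib.pyplot as plt
import numpy as np
import pandas as pd
import seaborn as sns

# Hypothetical data: two groups drawn from different normal distributions.
rng = np.random.default_rng(seed=1)
df = pd.DataFrame(
    {
        "group": ["A"] * 100 + ["B"] * 100,
        "value": np.concatenate(
            [rng.normal(0.0, 1.0, 100), rng.normal(2.0, 0.5, 100)]
        ),
    }
)

plt.figure()
# One violin per category on the x-axis; the width of each violin follows
# a kernel density estimate of the values in that group.
ax = sns.violinplot(data=df, x="group", y="value")

# plt.show()  # uncomment to open the figure in an interactive session
```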

The concise code required to create these complex visualizations with Seaborn represents a significant advantage. Instead of focusing on the intricacies of plotting, users can direct their attention towards analyzing and interpreting the data. This ease of use does not come at the expense of customization; Seaborn plots are highly customizable, giving users the ability to tweak them to suit their specific needs or aesthetic preferences.

In the context of our discussion on Python visualizers, Seaborn stands out as a natural progression from Matplotlib, offering enhanced capabilities for statistical data visualization with an emphasis on simplicity and efficiency. As we continue to explore the landscape of Python visualization tools, we’ll next turn our attention to interactive visualizations with Plotly, which introduces a new dimension to data exploration by making plots dynamically responsive to user input, further enriching the data analysis process.

Interactive Visualizations with Plotly

Building on the foundation of creating advanced visualizations with Seaborn, we transition into the realm of interactive data exploration through Plotly. Unlike the static images that Seaborn and Matplotlib produce by default, Plotly charts are interactive out of the box, which enhances user engagement and data comprehension. Interactivity lets users hover over data points for more detailed information, zoom in and out of charts, and even filter data on the fly. This level of interaction is invaluable for in-depth data exploration and for making data-driven decisions.

Plotly, a versatile library in Python, excels in creating interactive charts and dashboards. Getting started with Plotly is straightforward. Users can create stunning, interactive visualizations with just a few lines of code. For example, creating a simple line chart involves importing Plotly’s `graph_objects` module, building a figure from the data and layout specifications, and then calling the figure’s `show()` method to render the visualization in a notebook or web browser. This simplicity, combined with the power of interactivity, makes Plotly an exceptional tool for data scientists and analysts.

The power of Plotly extends beyond simple charts. It supports a wide array of chart types, including 3D charts, geographical maps, and even animated visualizations. Each of these can be customized extensively, allowing the creation of highly detailed and tailored visualizations that cater to specific data analysis needs.

The interactive nature of Plotly charts serves two main purposes: enhancing user engagement through direct interaction with data and facilitating a deeper understanding of the data. When users can manipulate the data they are viewing, they are more likely to spot trends, outliers, and patterns that static charts might not reveal. This active engagement with data fosters a more intuitive grasp of the information being presented, which is crucial for effective data exploration and analysis.

Following this discussion of interactive visualizations with Plotly, our exploration of data visualization techniques will venture into the specific area of geospatial data visualization with Folium. This transition highlights the broad spectrum of visualization capabilities available in Python, from statistical charts and interactive plots to rich, geographic data presentations. Folium, much like Plotly, offers interactive features but focuses on mapping and geographical data, providing a seamless bridge between data visualization and the physical world represented by such data.

Geospatial Data Visualization with Folium

Building on the foundation of interactive visualizations explored in the previous chapter through Plotly, we delve into the specialized domain of geospatial data visualization using Folium. Geospatial data is, by definition, tied to geographical coordinates. Folium, a powerful Python library, builds on Leaflet.js to offer a wide array of tools for creating interactive maps that can bring geographical datasets to life.

At the heart of Folium’s capability is its ability to create rich, interactive maps that can be easily integrated into Python applications. Users can zoom in and out, pan across regions, and view detailed information about specific locations—all through the intuitive interactivity that web maps provide. For instance, plotting a set of latitude and longitude points on a map to indicate locations of interest, such as retail outlets, schools, or wildfire incidents, becomes a task of minimal complexity with Folium.

One exemplary application of Folium is in the visualization of real-time datasets. Consider a dataset tracking the spread of an infectious disease across different regions. By representing this data on a map, with circles whose sizes correspond to the number of cases, viewers can immediately grasp the geographical spread and intensity of outbreaks. The interactive nature of the map, a feature emphasized in the previous chapter’s discussion of Plotly, further allows users to click on specific data points (e.g., circles representing cities) to obtain more detailed information, such as numbers of recoveries or active cases.

Beyond real-time tracking, Folium’s applications span across various domains including environmental management, where maps can illustrate changes in land use or deforestation over time, and in urban planning, where maps can help visualize traffic patterns, public transport networks, or the demographic breakdown of different neighborhoods.

To create a basic map with Folium, one would start by importing the Folium package and then instantiate a Map object by passing in a set of geographical coordinates (latitude and longitude) that determine the initial focal point of the map. Further customization allows the addition of markers, lines, shapes, and even popups that display text or images when interacted with. The resulting map is not only a visual representation but an interactive canvas that invites exploration and engagement.

This focus on geospatial data visualization with Folium complements the prior discussion on interactive visualizations by offering a tool specifically optimized for geography-related datasets. As we transition to the next chapter on Dashboards for Data Visualization, the integration of Folium maps into dashboard interfaces represents a forward step. Dashboards, which aggregate multiple visualizations into a coherent interface, benefit immensely from the inclusion of interactive maps, enriching the overall data exploration experience and providing comprehensive insights that static maps or isolated charts cannot achieve on their own.

Dashboards for Data Visualization

Building upon the exploration of geospatial data visualization with Folium, it’s essential to grasp another powerful visualization tool – Dashboards, specifically through the utilization of Dash, a Python framework. Dash empowers data scientists and analysts to create interactive, web-based dashboards that consolidate various data visualizations into a single, comprehensive interface. This seamless aggregation of data visualizations not only enriches the user experience but also amplifies the data’s narrative by offering a holistic view.

Dash, developed by Plotly, stands out for its ability to blend the simplicity of Python with the interactivity of modern web applications. The creation of dashboards in Dash revolves around two core components: Layout and Callbacks. The Layout is where the visual appearance of the dashboard is defined, employing a syntax that mirrors HTML but is powered by Python. This allows for the arranging of visual components, such as graphs and tables, in a structured format. Callbacks, on the other hand, breathe life into the dashboard, enabling interactivity. They are Python functions that are automatically triggered by interactions on the dashboard, for instance, the selection of a dropdown menu that dynamically updates the displayed graphs.

The benefits of using dashboards for data visualization are multifaceted. Firstly, they centralize disparate forms of data visualizations — be it charts, maps, or graphs — into a unified interface, making it easier for users to draw comparisons and insights without the need to toggle between separate visual outputs. This is particularly advantageous in scenarios where decision-makers need to digest complex datasets swiftly and make informed decisions. Moreover, the interactive nature of dashboards crafted with Dash enhances user engagement. Users are not just passive viewers but can interact with the data, drilling down into specifics, and filtering through layers to reach the precise information they need.

Following the establishment of dashboards as a powerful tool for data visualization, the next step involves refining these visualizations to ensure they are not only informative but effective. Transitioning into the principles and best practices for data visualization, it becomes critical to delve into aspects such as clarity, accuracy, design choices, and audience considerations. These aspects ensure that the dashboards created not only serve their purpose in aggregating data but do so in a manner that is accessible, understandable, and actionable to their intended audience.

Best Practices for Effective Data Visualization

Building on the foundation of understanding how dashboards can amplify the visual representation of data using Python frameworks like Dash, this chapter delves deeper into the principles and practices that underpin effective data visualization. The key to crafting visualizations that not only captivate but also educate the audience is adhering to core guidelines that govern clarity, accuracy, consistency in design choices, and audience-specific considerations.

**Clarity** is paramount in data visualization. The primary goal is to make complex data understandable and accessible. This involves choosing visual elements that directly enhance the narrative you wish to express. For instance, a well-placed bar chart can illustrate changes over time more effectively than a dense table of numbers. Clarity also extends to labeling. Axes, legends, and titles should be clearly legible, providing just enough information to contextualize the data without overwhelming the viewer.

**Accuracy** in representing data should never be compromised. Misleading visualizations not only distort the true narrative but can also erode trust between you and your audience. This involves careful consideration of scale, proportion, and the use of color to depict variability without exaggerating or understating the real situation. For example, ensuring that the axis starts at zero can prevent misinterpretation of the magnitude of differences in bar charts.
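The effect of the axis baseline is easy to see side by side. In this sketch, two invented values that differ by about 2% are drawn twice: once with a truncated y-axis, which makes the gap look dramatic, and once with the axis starting at zero.

```python
import matplotlib.pyplot as plt

# Hypothetical values that differ by roughly 2%.
labels = ["Product A", "Product B"]
values = [98, 100]

fig, (ax_truncated, ax_zero) = plt.subplots(1, 2, figsize=(8, 3))

# Truncated axis: the small difference fills most of the panel.
ax_truncated.bar(labels, values)
ax_truncated.set_ylim(97, 100.5)
ax_truncated.set_title("Truncated axis")

# Axis from zero: bar heights are proportional to the values.
ax_zero.bar(labels, values)
ax_zero.set_ylim(bottom=0)
ax_zero.set_title("Axis from zero")

# plt.show()  # uncomment to open the figure in an interactive session
```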

The **design choices** made should always serve the purpose of the visualization. This includes choosing the right type of chart or graph that matches the data’s nature and the story you wish to convey. It also involves thoughtful application of color, which can be a powerful tool to differentiate, highlight, or group data points, but when used improperly can confuse or mislead. Utilizing contrast and negative space can draw attention to the most important parts of the data, guiding the viewer’s eye through the visualization in a logical sequence.

**Considering your audience** is crucial in tailoring the visualization to their level of expertise and interest. A visualization meant for a general audience should avoid technical jargon and focus on simplifying the data to its most impactful insights. Conversely, a specialized audience might appreciate more detail and complexity, provided it is relevant and adds value to their understanding. Understanding your audience’s expectations and prior knowledge can inform the level of detail and complexity your visualization should convey.

In conclusion, crafting effective data visualizations is an art and science that extends beyond the choice of tools and technologies, such as Python and Dash for dashboard creation explored in the previous chapter. As we anticipate future trends in the next chapter, the constant emphasis on clarity, accuracy, design, and audience-specific customization will remain critical. Innovations such as AR and machine learning offer exciting possibilities for enhancing visual storytelling, but the foundational best practices outlined here will continue to guide the creation of impactful and meaningful visualizations.

Future Trends in Python Visualization

Building on the foundation of key principles for effective data visualization discussed in the prior chapter, the future of data visualization within the Python ecosystem seems poised for exciting advancements. These advancements are largely propelled by the integration of emerging technologies such as Augmented Reality (AR) and machine learning, which promise to revolutionize visual storytelling and data exploration.

The inclusion of AR in Python visualizers is not merely a futuristic dream but a burgeoning reality. AR has the potential to transcend traditional data visualization methods by overlaying data in the real world, offering a more immersive and interactive experience. Imagine pointing your device at a retail shelf and instantly seeing overlaid sales data, customer reviews, or supply chain information, all powered by Python-based AR applications. This immersive approach can enhance understanding and engagement, making data insights more accessible and actionable.

Moreover, the incorporation of machine learning into Python visualizers heralds a new era of intelligent data exploration. Machine learning models can analyze vast datasets to identify patterns, trends, and anomalies. When these capabilities are integrated with visualization tools, users can benefit from predictive analytics and automated insights, which can be visualized in real-time. This integration can transform raw data into intuitive visual narratives, enabling users to grasp complex phenomena at a glance.

Furthermore, the synergy between machine learning and AR within Python visualizers could unlock novel visualization types. For instance, predictive visualizations in AR could project future trends directly onto physical entities, such as forecasting the impact of climate change on a geographic location in real-time.

These advancements necessitate a robust foundation in data science and programming principles, highlighting the importance of the Python ecosystem’s flexibility and the vast library support it offers. As Python continues to evolve, so too will its capabilities for data visualization, promising richer, more interactive, and insightful visual storytelling methods. This evolution will not only enhance the data science community’s ability to communicate complex data but also democratize data insights, making them more comprehensible and accessible to a wider audience. The future of Python visualizers is a testament to the limitless possibilities that emerge from the convergence of technology, creativity, and data.

Conclusions

In summary, Python visualizers play an essential role in transforming raw data into meaningful insights through graphical representations. By leveraging powerful libraries, users can enhance their analytical capabilities, making data exploration intuitive and engaging. As data complexity increases, mastering these tools becomes crucial for effective decision-making and communication in various domains.