Edge Computing in 2025: Powering Real-Time Processing at the Source

As we approach 2025, edge computing is set to revolutionize how data is processed, bringing computation closer to the source. This shift promises to enhance real-time processing, reduce latency, and improve efficiency across industries. From smart cities to healthcare, the implications are vast. This article delves into the advancements, challenges, and future prospects of edge computing, offering a comprehensive overview of its transformative potential.

The Evolution of Edge Computing

Edge computing has undergone a radical transformation since its early conceptualization in the late 2000s, evolving from a niche solution to a cornerstone of modern data processing. Initially, edge computing emerged as a response to the limitations of centralized cloud architectures, particularly latency and bandwidth constraints. Early adopters, such as content delivery networks (CDNs), leveraged edge nodes to cache data closer to users, but the true potential of edge computing remained untapped until the rise of IoT and 5G.

By 2025, edge computing will have matured into a decentralized platform, driven by advances in hardware, AI, and connectivity. Key milestones include the development of micro data centers around 2018, which brought computational resources closer to end users, and the integration of AI at the edge around 2021, which enabled real-time decision-making without cloud dependency. The proliferation of 5G networks further accelerated adoption: its low-latency modes target delays of around a millisecond on the radio link, unlocking use cases like autonomous vehicles and smart cities.

Advances in edge-native chips, such as energy-efficient GPUs and emerging neuromorphic processors, are improving performance while reducing power consumption. Meanwhile, the rise of serverless edge computing has simplified deployment, allowing developers to run functions on edge nodes without managing the underlying infrastructure. By 2025, edge computing will not just complement cloud systems; it will reshape them, enabling a hybrid model where data is processed dynamically at the most efficient location.

The shift toward edge computing reflects a broader trend in technology: the demand for immediacy. As industries from healthcare to manufacturing embrace real-time analytics, edge computing stands as the backbone of this transformation, ensuring that data is processed where it matters most—right at the source.

How Edge Computing Works

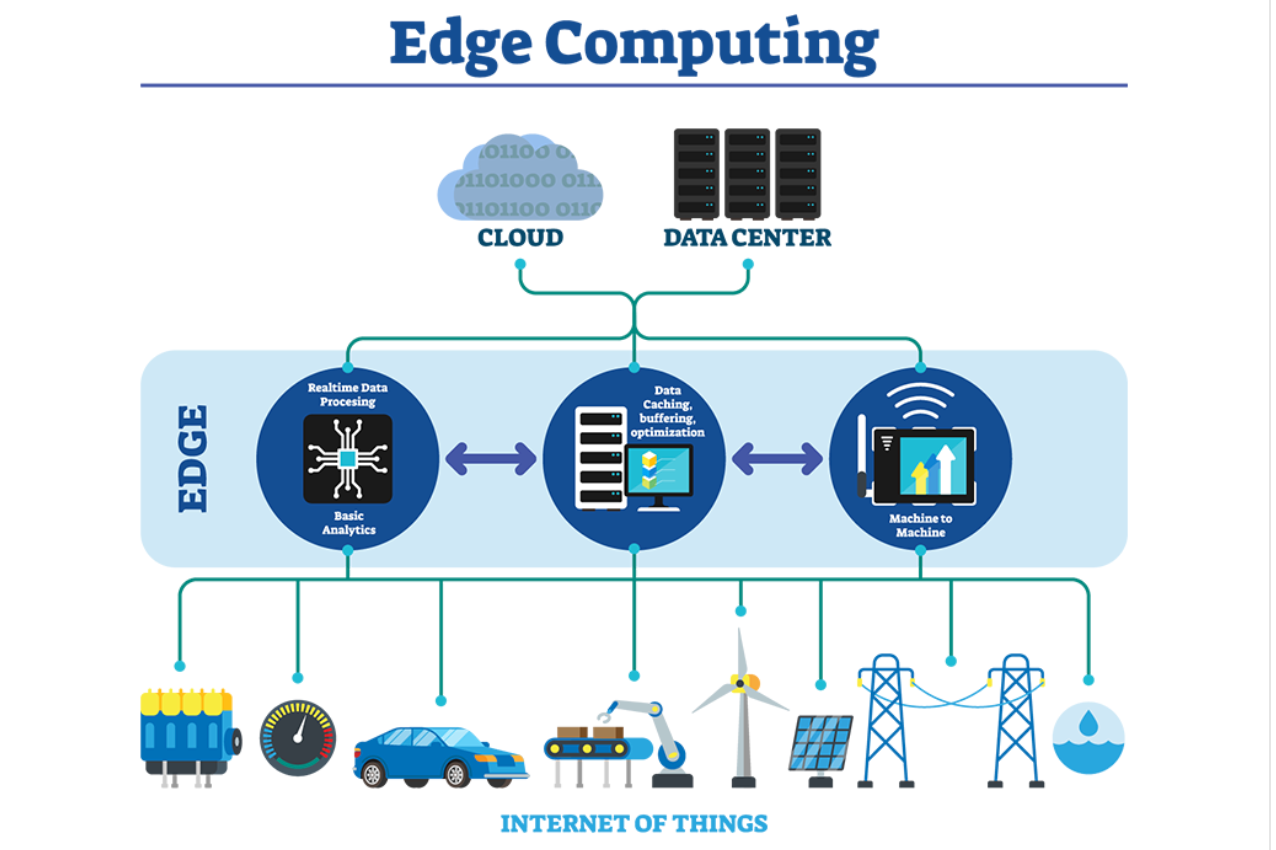

Edge computing represents a paradigm shift from traditional cloud computing by bringing computation and data storage closer to the source of data generation. Unlike cloud computing, which relies on centralized data centers, edge computing distributes processing power across a network of localized nodes—often referred to as edge devices or edge servers. This architecture minimizes latency, reduces bandwidth consumption, and enables real-time decision-making by processing data where it’s created.

The core principle of edge computing lies in its decentralized architecture. Instead of sending raw data to a distant cloud server, edge devices preprocess and filter data locally. For example, a smart factory using edge computing can analyze sensor data from machinery on-site, detecting anomalies instantly without waiting for cloud-based analytics. Similarly, autonomous vehicles rely on edge nodes to process lidar and camera data in milliseconds, ensuring immediate responses to road conditions.
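
The local preprocessing described above can be sketched in a few lines of Python. This is a minimal illustration rather than a production design: the temperature limit, the function name `summarize_window`, and the sample readings are all assumptions made for the example. It shows a device reducing a window of raw readings to one compact summary, so only the summary and any out-of-range values need to leave the site.

```python
from statistics import mean

# Hypothetical limit; a real deployment would tune this per machine.
TEMP_LIMIT_C = 85.0

def summarize_window(readings, limit=TEMP_LIMIT_C):
    """Reduce a non-empty window of raw readings to one compact message."""
    anomalies = [r for r in readings if r > limit]
    return {
        "count": len(readings),
        "mean": round(mean(readings), 2),
        "max": max(readings),
        "anomalies": anomalies,  # only out-of-range values are forwarded
    }

# Illustrative sensor window (made-up values).
window = [71.2, 70.8, 72.1, 90.4, 71.5]
summary = summarize_window(window)
print(summary)
```

With longer windows the saving grows: a window of thousands of samples still collapses to a message of roughly the same size.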

Edge computing differs from cloud computing in three key ways:

- Latency: By processing data locally, edge computing avoids the round trip to centralized servers, which is crucial for time-sensitive applications like telemedicine or industrial automation.

- Bandwidth Efficiency: Transmitting only relevant insights—rather than raw data—reduces network congestion, a critical advantage for IoT deployments with thousands of devices.

- Resilience: Edge systems can operate offline or with intermittent connectivity, ensuring continuity in remote or unstable environments.
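
The resilience point can be illustrated with a store-and-forward buffer, a common pattern for devices with intermittent connectivity. The sketch below is an assumption-laden example, not a reference implementation: the class name `StoreAndForward`, the `uplink` callable, and the buffer size are all hypothetical.

```python
from collections import deque

class StoreAndForward:
    """Buffer messages while the uplink is down; flush in order when it returns."""

    def __init__(self, send, max_buffered=1000):
        self._send = send  # callable that returns True on successful delivery
        self._queue = deque(maxlen=max_buffered)  # oldest entries drop when full

    def publish(self, message):
        self._queue.append(message)
        self.flush()

    def flush(self):
        while self._queue:
            if not self._send(self._queue[0]):
                break  # still offline; keep the message for a later attempt
            self._queue.popleft()

    def pending(self):
        return len(self._queue)

# Simulated uplink (hypothetical): a flag stands in for real connectivity.
link = {"up": False}
delivered = []

def uplink(message):
    if link["up"]:
        delivered.append(message)
    return link["up"]

buffer = StoreAndForward(uplink)
buffer.publish("reading-1")
buffer.publish("reading-2")  # both are held locally while offline
link["up"] = True
buffer.publish("reading-3")  # connectivity restored: all three go out in order
```

A bounded queue is a deliberate trade-off here: on a constrained device it is usually better to lose the oldest readings than to run out of memory.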

As edge computing evolves, hybrid models are emerging, where edge nodes collaborate with cloud systems for complex analytics. This synergy ensures scalability while preserving the speed and efficiency of localized processing—a foundation for the next wave of real-time applications in 2025.

Key Technologies Driving Edge Computing

Edge computing in 2025 is being propelled by a trio of transformative technologies: 5G networks, IoT devices, and AI algorithms. These innovations work in synergy to overcome the limitations of centralized cloud computing, enabling real-time data processing at the source.

5G networks are the backbone of edge computing, providing low latency and high bandwidth. Where 4G round trips typically take tens of milliseconds, 5G is designed to bring response times down to a few milliseconds, making it well suited to applications like autonomous vehicles and industrial automation. The distributed nature of 5G small cells also complements edge nodes, supporting steady data flow between devices and localized servers.

The explosion of IoT devices generates vast amounts of data that can’t all be sent to the cloud efficiently. Smart sensors, wearables, and industrial machines now process data locally, reducing congestion and improving response times. For example, a smart factory might use edge-enabled IoT sensors to monitor equipment health in real time, preventing downtime by triggering immediate maintenance alerts.

AI at the edge is revolutionizing how data is analyzed. Instead of sending raw data to centralized servers, lightweight AI models run directly on edge devices. This allows for instant decision-making, such as facial recognition in security cameras or predictive maintenance in wind turbines. Advances in tinyML (machine learning for microcontrollers) are making AI more efficient, even on resource-constrained devices.
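
One widely used way to make a model "lightweight" enough for a constrained device is quantization: storing weights as 8-bit integers instead of 32-bit floats. The article does not name a specific technique, so treat the following as an illustrative assumption. It shows symmetric linear quantization on a handful of made-up weights; the function names are hypothetical.

```python
def quantize_int8(weights):
    """Symmetric linear quantization of floats to the int8 range [-127, 127]."""
    peak = max(abs(w) for w in weights)
    scale = peak / 127 if peak else 1.0
    return [round(w / scale) for w in weights], scale

def dequantize(quantized, scale):
    """Recover approximate float weights from their int8 representation."""
    return [q * scale for q in quantized]

# Made-up weights for illustration only.
weights = [0.82, -1.27, 0.05, 0.4]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
print(q, restored)
```

Each weight now fits in one byte instead of four, at the cost of a rounding error of at most half the scale step per weight.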

The integration of these technologies creates a robust ecosystem where:

- 5G ensures rapid communication

- IoT devices collect and preprocess data

- AI extracts actionable insights locally

This convergence not only enhances performance but also addresses privacy and security concerns by minimizing data transmission. As these technologies mature, edge computing will become indispensable across industries, setting the stage for the next wave of decentralized innovation.

Applications of Edge Computing in Various Industries

Edge computing is revolutionizing industries by enabling real-time data processing at the source, reducing latency, and improving efficiency. In healthcare, edge computing powers remote patient monitoring and predictive diagnostics. Wearable devices and IoT sensors collect vital signs, process them locally, and alert medical staff to anomalies instantly. For example, edge-enabled AI can detect early signs of cardiac arrest by analyzing ECG data in milliseconds, saving critical time compared to cloud-based processing. Hospitals also use edge nodes to manage equipment maintenance, predicting failures before they occur.

The manufacturing sector leverages edge computing for smart factories, where machines equipped with sensors optimize production lines autonomously. Real-time quality control systems inspect products using computer vision, flagging defects without human intervention. Predictive maintenance at the edge minimizes downtime by analyzing vibration and temperature data from machinery, ensuring seamless operations. Edge computing also enhances supply chain visibility, tracking goods from production to delivery with minimal lag.
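
As a rough sketch of how predictive maintenance might flag a problem on the device itself, the example below compares each new vibration reading with a rolling baseline and raises an alert when it deviates by more than a chosen number of standard deviations. The window size, the z-score limit, the class name, and the sample values are assumptions for illustration; real systems typically use richer features such as frequency spectra.

```python
from collections import deque
from statistics import mean, pstdev

class VibrationMonitor:
    def __init__(self, window=5, z_limit=3.0):
        self._history = deque(maxlen=window)
        self._z_limit = z_limit

    def check(self, value):
        """Return True if value deviates sharply from the recent baseline."""
        alert = False
        if len(self._history) == self._history.maxlen:
            mu = mean(self._history)
            sigma = pstdev(self._history)
            alert = sigma > 0 and abs(value - mu) / sigma > self._z_limit
        if not alert:
            self._history.append(value)  # keep outliers out of the baseline
        return alert

monitor = VibrationMonitor()
samples = [1.0, 1.1, 0.9, 1.0, 1.05, 1.02, 2.5, 1.0]  # made-up amplitudes
alerts = [monitor.check(s) for s in samples]
print(alerts)
```

Only the spike at 2.5 triggers an alert; the first five readings merely fill the baseline window.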

In transportation, edge computing enables autonomous vehicles to make split-second decisions by processing data from LiDAR, cameras, and radar locally. Traffic management systems use edge nodes to analyze congestion patterns and adjust signals dynamically, reducing urban gridlock. Logistics companies deploy edge solutions for fleet optimization, monitoring fuel efficiency and route conditions in real time.

Retailers benefit from edge-driven personalized experiences, where in-store sensors analyze customer behavior and deliver targeted promotions instantly. Similarly, energy grids use edge computing to balance supply and demand, integrating renewable sources efficiently.

By 2025, edge computing will be deeply embedded across industries, transforming operations with faster, decentralized processing. Its integration with 5G, AI, and IoT—as discussed earlier—will further amplify these advancements, setting the stage for smarter urban ecosystems, the focus of the next chapter.

Edge Computing and Smart Cities

By 2025, edge computing will be the backbone of smart cities, enabling real-time data processing at the source and transforming urban landscapes into highly responsive, efficient ecosystems. Unlike traditional cloud-based systems, edge computing minimizes latency by processing data locally—near sensors, cameras, and IoT devices—ensuring instant decision-making for critical infrastructure.

Infrastructure Optimization will see the most dramatic shift. Traffic management systems, powered by edge nodes, will analyze vehicle flow in real time, adjusting signals dynamically to reduce congestion. Smart grids will leverage edge analytics to balance energy distribution, responding instantaneously to demand fluctuations and integrating renewable sources seamlessly. Water and waste management systems will detect leaks or blockages autonomously, preventing costly damages before they escalate.
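
To make the idea of dynamic signal adjustment concrete, here is a deliberately simplified sketch in which an edge node at an intersection divides one signal cycle among the approaches in proportion to their queue lengths. The cycle length, the minimum green time, and the function name are assumptions; real controllers also account for yellow and all-red intervals, pedestrians, and coordination with neighboring intersections.

```python
def allocate_green_time(queue_lengths, cycle_s=90, min_green_s=10):
    """Split one signal cycle across approaches in proportion to queue length."""
    approaches = list(queue_lengths)
    spare = cycle_s - min_green_s * len(approaches)
    total = sum(queue_lengths.values())
    plan = {}
    for name in approaches:
        # With no vehicles waiting anywhere, share the spare time equally.
        share = queue_lengths[name] / total if total else 1 / len(approaches)
        plan[name] = min_green_s + spare * share
    return plan

# Hypothetical vehicle counts from roadside sensors.
plan = allocate_green_time({"north": 12, "south": 4, "east": 2, "west": 2})
print(plan)
```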

In public services, edge computing enhances safety and responsiveness. Surveillance cameras with embedded AI will identify anomalies—like unattended bags or erratic behavior—without relying on distant servers, triggering immediate alerts. Emergency services will use edge-processed data from wearables and environmental sensors to deploy resources faster during crises. Public transportation will become more reliable, with edge-enabled predictive maintenance reducing downtime and improving commuter experiences.

For urban living, the impact is profound. Residents will interact with hyper-localized services, from personalized air quality alerts to real-time parking availability updates. Smart buildings will optimize energy use based on occupancy patterns, while edge-powered AR navigation aids will guide visitors through complex spaces effortlessly.

However, as explored in the next chapter, scaling these solutions presents challenges—security risks from distributed nodes, interoperability between heterogeneous systems, and the need for robust edge governance frameworks. Yet, the potential for smarter, more livable cities makes overcoming these hurdles imperative.

Challenges in Implementing Edge Computing

Edge computing promises to revolutionize real-time data processing by bringing computation closer to the source, but its adoption faces significant challenges. One of the most pressing issues is security. Unlike centralized cloud systems, edge devices are often deployed in uncontrolled environments, making them vulnerable to physical tampering, cyberattacks, and data breaches. Ensuring robust encryption and authentication mechanisms across distributed nodes remains a complex task, a topic that will be explored further in the next chapter.

Another critical hurdle is scalability. As edge networks expand to accommodate millions of devices—from IoT sensors to autonomous vehicles—managing resources efficiently becomes daunting. Traditional cloud architectures rely on centralized control, but edge computing demands dynamic load balancing and fault tolerance across heterogeneous hardware. Solutions like federated learning and adaptive resource allocation algorithms are emerging to address these challenges, but widespread implementation is still in progress.
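
A minimal sketch of load balancing with basic fault tolerance across mixed hardware might look like the following. It simply picks the healthy node with the most spare capacity that can fit the task. The node names, capacity units, and the `pick_node` function are hypothetical, and a real scheduler would also weigh network distance, data locality, and priorities.

```python
def pick_node(nodes, cost):
    """Choose the healthy node with the most headroom that can fit the task."""
    candidates = [
        n for n in nodes
        if n["healthy"] and n["capacity"] - n["load"] >= cost
    ]
    if not candidates:
        return None  # caller could queue the task or fall back to the cloud
    best = max(candidates, key=lambda n: n["capacity"] - n["load"])
    best["load"] += cost
    return best["name"]

# Hypothetical fleet with different capacities (arbitrary units).
nodes = [
    {"name": "gateway-a", "capacity": 4, "load": 3, "healthy": True},
    {"name": "gateway-b", "capacity": 8, "load": 2, "healthy": False},
    {"name": "micro-dc", "capacity": 16, "load": 10, "healthy": True},
]
first = pick_node(nodes, cost=2)   # fits only on micro-dc
second = pick_node(nodes, cost=5)  # no healthy node has enough headroom left
print(first, second)
```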

Interoperability further complicates adoption. With multiple vendors offering proprietary edge solutions, seamless communication between devices and platforms is often hindered. Standardization efforts, such as those led by the Edge Computing Consortium, aim to establish common protocols, but industry-wide adoption remains slow. Without universal standards, integrating legacy systems with modern edge infrastructure becomes an expensive and fragmented process.

Lastly, latency and bandwidth constraints persist. While edge computing reduces reliance on distant data centers, inconsistent network performance can still degrade real-time processing. Innovations in 5G and edge-native applications are mitigating these issues, but rural and underdeveloped areas continue to face connectivity gaps.

Overcoming these challenges requires a combination of technological advancements, industry collaboration, and regulatory frameworks to ensure edge computing reaches its full potential in 2025 and beyond.

Security and Privacy in Edge Computing

As edge computing becomes more pervasive in 2025, security and privacy concerns take center stage. Unlike centralized cloud architectures, edge computing distributes data processing across numerous nodes, increasing the attack surface. Man-in-the-middle attacks, device tampering, and unauthorized access are just a few risks threatening decentralized systems. To counter these, robust security frameworks are being deployed, integrating advanced encryption, zero-trust models, and hardware-based security.

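Encryption remains a cornerstone of edge security. Well-established ciphers such as AES-256 and ChaCha20 are practical on edge devices with limited processing power: AES benefits from hardware acceleration where it is available, and ChaCha20 performs well in software. Used in authenticated modes such as AES-GCM or ChaCha20-Poly1305, they protect both confidentiality and integrity without a large performance cost. Meanwhile, homomorphic encryption, which allows computation on encrypted data without decrypting it, is gaining attention for sensitive industries like healthcare and finance, although its computational overhead remains high for constrained devices.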

Authentication mechanisms have also evolved. Multi-factor authentication (MFA) and biometric verification are now standard, but edge environments demand more. Decentralized identity solutions, leveraging blockchain, enable secure device-to-device verification without relying on a central authority. Additionally, hardware security modules (HSMs) and trusted platform modules (TPMs) keep cryptographic keys in dedicated hardware on the device, making them far harder to extract even if the device is physically tampered with.
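
The simplest building block behind device-to-device verification is message authentication with a shared key. The sketch below uses HMAC-SHA256 from the Python standard library. It is not the blockchain-based identity or the TPM-backed key storage mentioned above: the key is a hard-coded demo value, the payload fields are invented, and there is no replay protection. In practice the key would live in secure hardware.

```python
import hashlib
import hmac
import json

def sign(payload: dict, key: bytes) -> dict:
    """Serialize the payload and attach an HMAC-SHA256 tag."""
    body = json.dumps(payload, sort_keys=True).encode()
    tag = hmac.new(key, body, hashlib.sha256).hexdigest()
    return {"body": body.decode(), "tag": tag}

def verify(message: dict, key: bytes) -> bool:
    """Recompute the tag and compare in constant time."""
    expected = hmac.new(key, message["body"].encode(), hashlib.sha256).hexdigest()
    return hmac.compare_digest(expected, message["tag"])

# Demo key for illustration only; never hard-code real keys.
device_key = b"demo-key-not-for-production"
msg = sign({"device": "sensor-17", "temp_c": 71.5}, device_key)
print(verify(msg, device_key))
```

Any change to the body, or verification with a different key, makes `verify` return `False`.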

Beyond encryption and authentication, real-time threat detection powered by AI is critical. Edge-native intrusion detection systems (IDS) analyze local traffic patterns, flagging anomalies before they escalate. Federated learning further enhances privacy by training AI models on-device, reducing the need for raw data transmission.
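
The aggregation step at the heart of federated learning can be sketched as a weighted average of locally trained weights, often called federated averaging. The example below is a toy under stated assumptions: each device reports a short list of weights plus the number of samples it trained on, and the numbers are made up. Only these weights travel over the network; the raw data stays on the device.

```python
def federated_average(updates):
    """Weighted average of model weights; each update is (weights, n_samples)."""
    total = sum(n for _, n in updates)
    size = len(updates[0][0])
    return [
        sum(w[i] * n for w, n in updates) / total
        for i in range(size)
    ]

# Hypothetical updates from two devices: (local weights, local sample count).
updates = [
    ([0.2, 1.0], 100),
    ([0.4, 0.0], 300),
]
global_weights = federated_average(updates)
print(global_weights)
```

The device with more samples pulls the average toward its own weights, which is why the first coordinate lands closer to 0.4 than to 0.2.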

Despite these measures, challenges persist. The dynamic nature of edge networks complicates consistent policy enforcement, while regulatory compliance with laws such as GDPR and CCPA requires adaptable frameworks. As edge computing evolves, security must be treated as a moving target, with defenses updated to anticipate threats before they emerge. The next chapter will explore how these advancements lay the groundwork for edge computing’s future beyond 2025.

The Future of Edge Computing Beyond 2025

As we look beyond 2025, edge computing is poised to evolve into an even more transformative force, driven by advancements in hardware, software, and connectivity. The convergence of 5G/6G networks and AI at the edge, and further out possibly quantum computing, could unlock new capabilities and push real-time processing toward ever lower latency. Edge devices will no longer be mere data collectors but intelligent nodes capable of autonomous decision-making, reducing reliance on centralized cloud systems even further.

One major trend will be the rise of self-optimizing edge networks, where devices dynamically adjust their computational load based on real-time demand. Machine learning models deployed at the edge will continuously improve through federated learning, allowing devices to share insights without exposing raw data—addressing privacy concerns raised in earlier discussions. Additionally, neuromorphic computing could revolutionize edge hardware by mimicking the human brain’s efficiency, drastically reducing power consumption while boosting processing speed.

Another frontier is the integration of edge computing with IoT ecosystems, enabling smart cities and industries to operate with seamless automation. For instance, autonomous vehicles will rely on edge nodes for instant collision avoidance, while factories will use edge-powered digital twins for predictive maintenance. The proliferation of edge-native applications will also blur the line between cloud and edge, creating hybrid architectures where critical tasks are processed locally, while less time-sensitive data is offloaded to the cloud—a theme explored in the next chapter.

Finally, sustainability will drive innovation, with energy-efficient edge devices powered by renewable sources or ambient energy harvesting. As edge computing matures, its role in enabling a decentralized, resilient, and intelligent digital infrastructure will redefine what’s possible in real-time data processing.

Edge Computing vs Cloud Computing: A Comparative Analysis

Edge computing and cloud computing represent two distinct paradigms for data processing, each with unique strengths and trade-offs. While cloud computing centralizes data processing in remote data centers, edge computing decentralizes it, bringing computation closer to the data source. By 2025, the choice between these models will depend on factors like latency, bandwidth, security, and scalability.

Advantages of Edge Computing:

- Low Latency: Processing data locally eliminates the need to transmit it to distant servers, enabling real-time responses—critical for applications like autonomous vehicles and industrial IoT.

- Bandwidth Efficiency: Reducing data transfers to the cloud cuts costs and congestion, ideal for high-volume environments such as smart cities.

- Enhanced Privacy: Sensitive data can be processed on-site, minimizing exposure to external breaches.

Advantages of Cloud Computing:

- Scalability: Cloud platforms offer virtually unlimited resources, making them perfect for large-scale analytics and storage.

- Centralized Management: Simplifies updates, maintenance, and global accessibility for distributed teams.

- Cost-Effectiveness: Pay-as-you-go models reduce upfront infrastructure investments.

However, edge computing struggles with limited local processing power and higher upfront deployment costs, while cloud computing faces latency issues and dependency on stable internet connections. The optimal choice depends on the use case: edge computing excels in time-sensitive, localized applications, whereas cloud computing suits data-intensive, globally distributed workloads.
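
The trade-off above can be reduced to a simple latency budget: a task can go to the cloud only if the network round trip plus cloud compute time fits within its deadline. The sketch below encodes one possible policy, which prefers the cloud when it is fast enough and otherwise uses the edge. The function name and all timings are hypothetical figures chosen for illustration.

```python
def choose_placement(deadline_ms, cloud_rtt_ms, cloud_compute_ms, edge_compute_ms):
    """Pick where to run a task given a response deadline (all values in ms)."""
    cloud_total_ms = cloud_rtt_ms + cloud_compute_ms
    if cloud_total_ms <= deadline_ms:
        return "cloud"  # not time-critical: use the larger resource pool
    if edge_compute_ms <= deadline_ms:
        return "edge"  # only local processing meets the deadline
    return "infeasible"

# Hypothetical workloads.
braking = choose_placement(deadline_ms=20, cloud_rtt_ms=60,
                           cloud_compute_ms=2, edge_compute_ms=8)
report = choose_placement(deadline_ms=60_000, cloud_rtt_ms=60,
                          cloud_compute_ms=500, edge_compute_ms=20_000)
print(braking, report)
```

Other policies are equally defensible, for example preferring the edge whenever it meets the deadline in order to save bandwidth or keep data on-site.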

As industries evolve, hybrid models are emerging, combining edge agility with cloud scalability. Businesses must assess their specific needs to determine the right balance, ensuring seamless integration with future advancements discussed in previous chapters while preparing for the infrastructure shifts outlined in the next section.

Preparing for an Edge Computing Future

As businesses and individuals prepare for the widespread adoption of edge computing by 2025, strategic planning becomes essential to harness its real-time processing capabilities. The shift from centralized cloud models to decentralized edge architectures requires careful consideration of infrastructure, workforce skills, and deployment strategies.

Infrastructure Readiness

Edge computing demands a robust, distributed infrastructure. Organizations must invest in:

- Edge nodes: Deploying localized servers or micro-data centers close to data sources to minimize latency.

- 5G and high-speed networks: Ensuring low-latency connectivity between edge devices and central systems.

- Scalable storage solutions: Balancing on-premise and cloud storage to handle data efficiently without overloading central servers.

Skill Development

The transition to edge computing requires specialized expertise. Teams should focus on:

- Edge-native development: Learning orchestration tools such as Kubernetes, including lightweight distributions built for edge hardware, along with lightweight containerization.

- IoT and AI integration: Mastering real-time analytics and machine learning models optimized for edge devices.

- Security protocols: Implementing zero-trust architectures to protect decentralized data flows.

Implementation Strategies

A phased approach ensures smooth adoption:

- Pilot projects: Start with small-scale deployments in high-impact areas like manufacturing or retail to test edge efficiency.

- Hybrid models: Keep latency-critical workloads at the edge and offload tasks where real-time processing isn’t critical to the cloud, ensuring flexibility.

- Vendor partnerships: Collaborate with edge platform providers to leverage pre-built solutions and reduce development overhead.

By addressing these key areas, businesses can position themselves to capitalize on edge computing’s speed and responsiveness while mitigating risks associated with decentralization. The future belongs to those who prepare today.

Conclusions

Edge computing in 2025 represents a pivotal shift towards decentralized, efficient, and real-time data processing. By addressing current challenges and leveraging emerging technologies, it holds the promise to transform industries and everyday life. As we stand on the brink of this technological evolution, the potential for innovation is boundless, marking a new era in computing.