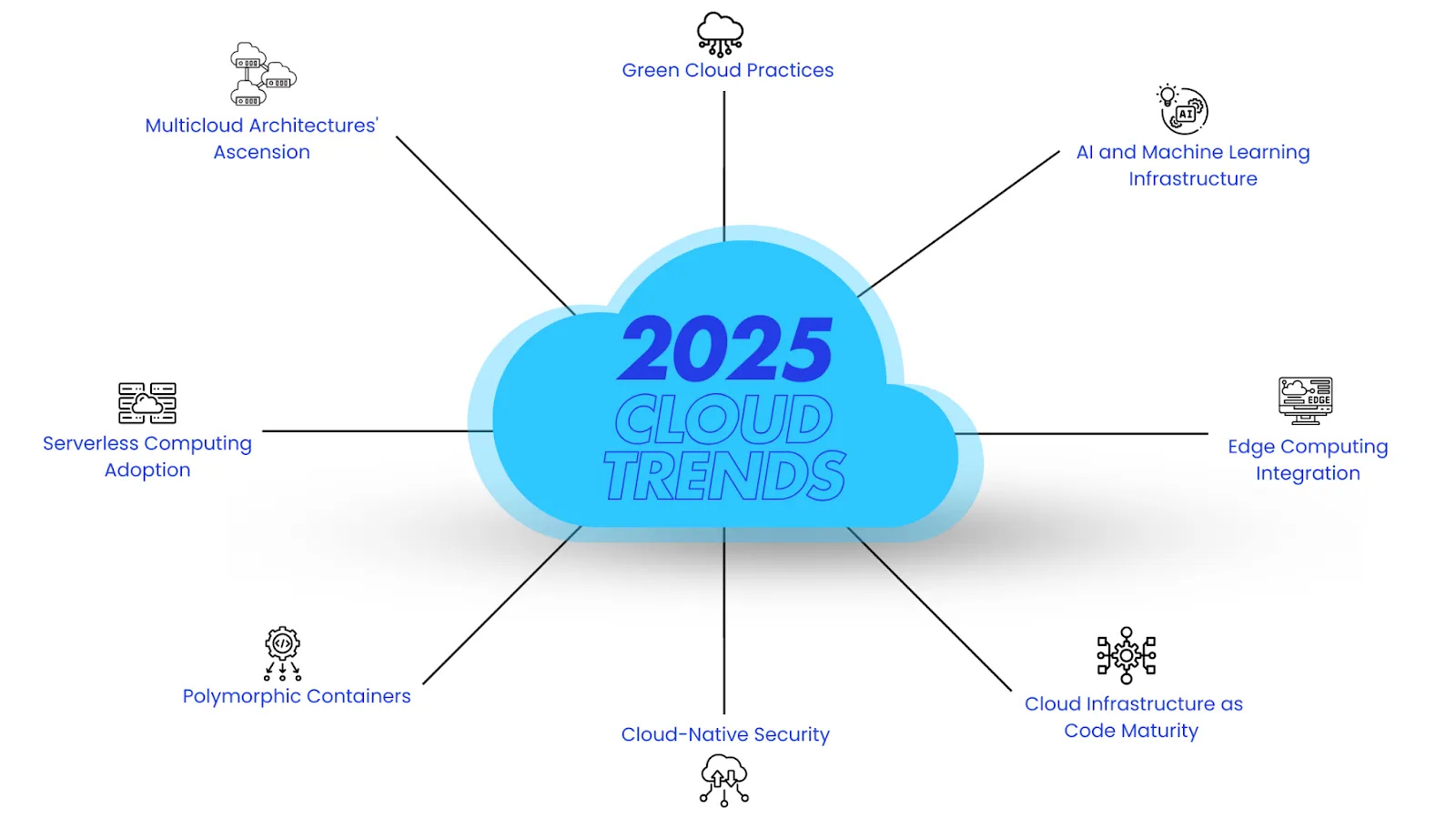

Cloud-Native & Serverless in 2025 – Trends, Technologies, and Business Impact

As we approach 2025, cloud-native and serverless technologies are central to building scalable applications. By combining containers, microservices, and serverless computing, organizations can ship and iterate more quickly. This overview explores the key trends, the technologies behind them, and their business impact.

The Evolution of Cloud-Native Technologies

The evolution of cloud-native technologies has been a cornerstone of modern application development, reshaping how enterprises build, deploy, and manage software. At its core, cloud-native leverages containers, microservices, and orchestration platforms like Kubernetes to create systems that are inherently scalable, resilient, and portable across cloud environments. By 2025, these technologies will have matured further, enabling even more dynamic and efficient workflows.

Containers, popularized by Docker, revolutionized application packaging by encapsulating code and dependencies into lightweight, isolated units. This eliminated the “it works on my machine” problem and streamlined deployment. However, managing containers at scale demanded robust orchestration—enter Kubernetes. As the de facto standard for container orchestration, Kubernetes automates deployment, scaling, and operations, ensuring applications run seamlessly across hybrid and multi-cloud setups.

Microservices architecture complements containers by breaking monolithic applications into smaller, loosely coupled services. This modularity accelerates development cycles, improves fault isolation, and allows teams to adopt polyglot programming. By 2025, advancements in service meshes (like Istio and Linkerd) will further enhance microservices communication, security, and observability, making them indispensable for cloud-native ecosystems.

The convergence of these technologies improves cross-cloud portability and reduces vendor lock-in. Multi-cluster approaches such as Kubernetes Federation and open-source tools such as Crossplane aim to make it easier to run and move workloads across clouds. Additionally, GitOps practices, in which infrastructure is declared in Git and automatically reconciled against the running environment, will become mainstream, enhancing collaboration and auditability.
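To make the GitOps idea concrete, here is a minimal sketch of the reconcile step: compare the state declared in Git with the state actually running, then compute the changes needed to close the gap. The `State` shape, the resource names, and the `plan` and `reconcile` helpers are assumptions made for illustration; real tools work against cluster APIs rather than in-memory dictionaries.

```python
from typing import Dict, List, Tuple

# Hypothetical resource state: resource name -> spec (image and replica count).
State = Dict[str, dict]


def plan(desired: State, actual: State) -> List[Tuple[str, str]]:
    """Return the (action, resource) steps needed to make actual match desired."""
    steps: List[Tuple[str, str]] = []
    for name, spec in desired.items():
        if name not in actual:
            steps.append(("create", name))
        elif actual[name] != spec:
            steps.append(("update", name))
    for name in actual:
        if name not in desired:
            steps.append(("delete", name))
    return steps


def reconcile(desired: State, actual: State) -> State:
    """Apply the plan to a copy of the actual state; the declared state wins."""
    result = dict(actual)
    for action, name in plan(desired, actual):
        if action == "delete":
            del result[name]
        else:
            result[name] = desired[name]
    return result


# What Git declares versus what is currently running (illustrative values).
desired = {
    "web": {"image": "web:1.4", "replicas": 3},
    "api": {"image": "api:2.0", "replicas": 2},
}
actual = {
    "web": {"image": "web:1.3", "replicas": 3},
    "cron": {"image": "cron:0.9", "replicas": 1},
}

print(plan(desired, actual))
print(reconcile(desired, actual) == desired)
```

In this example the plan updates `web`, creates `api`, and deletes `cron`. Because every change starts as a commit, the Git history doubles as the audit trail.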

As cloud-native matures, the focus shifts toward optimizing developer experience and operational efficiency. Serverless computing, the next chapter’s focus, builds upon these foundations by abstracting infrastructure entirely. Together, cloud-native and serverless form a powerful duo, driving the next generation of scalable, agile, and cost-effective applications.

Serverless Computing Unpacked

Serverless computing represents a fundamental shift in how applications are built and deployed, abstracting away infrastructure concerns so developers can focus on code. By 2025, this paradigm will dominate scalable application development, driven by its ability to reduce operational overhead while improving agility. Unlike traditional cloud-native approaches that still require managing containers or orchestration layers, serverless hands provisioning, scaling, and patching to the provider. Teams remain responsible for their code, configuration, and access controls, so the result is better described as low-ops than zero-ops.

The core advantage of serverless lies in its automatic scaling, which adjusts resources to match demand and avoids both over-provisioning and under-provisioning. This optimizes costs and keeps performance steady during traffic spikes. Services like AWS Lambda, Azure Functions, and Google Cloud Run handle everything from provisioning to patching, allowing teams to deploy features faster.
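As a rough illustration of demand-based scaling, the sketch below derives an instance count from the number of in-flight requests and a per-instance concurrency target, scaling to zero when idle. The function name, parameters, and default cap are assumptions; each platform uses its own algorithm, with smoothing windows and limits that are not modeled here.

```python
import math


def desired_instances(in_flight: int, target_concurrency: int,
                      max_instances: int = 100) -> int:
    """Instances needed so each one handles at most target_concurrency requests."""
    if target_concurrency <= 0:
        raise ValueError("target_concurrency must be positive")
    if in_flight <= 0:
        return 0  # scale to zero when there is no traffic
    needed = math.ceil(in_flight / target_concurrency)
    return min(max_instances, needed)  # respect the configured ceiling


# Illustrative traffic levels: idle, moderate load, and a spike.
for load in (0, 25, 5000):
    print(load, desired_instances(load, target_concurrency=10))
```

The cap matters in practice: without an upper bound, a traffic spike (or a retry storm) translates directly into spend.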

Cost efficiency is another advantage. With serverless, businesses pay only for the compute time they consume, avoiding idle resource expenses. This granular billing model, combined with built-in high availability, makes it ideal for event-driven workloads, APIs, and microservices. Moreover, serverless databases (e.g., DynamoDB) remove capacity management from the data tier, and edge computing integrations reduce latency for real-time applications.
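The billing difference can be worked through with simple arithmetic. The sketch below compares a pay-per-use bill (compute time multiplied by memory, plus a per-request fee) with an always-on bill for a fixed number of instances. All prices and workload figures are hypothetical placeholders, not any provider's actual rates.

```python
def pay_per_use_cost(invocations: int, avg_duration_s: float, memory_gb: float,
                     price_per_gb_second: float,
                     price_per_million_requests: float) -> float:
    """Monthly cost when billed only for execution time and request count."""
    compute = invocations * avg_duration_s * memory_gb * price_per_gb_second
    requests = invocations / 1_000_000 * price_per_million_requests
    return compute + requests


def always_on_cost(instances: int, hourly_price: float, hours: int = 730) -> float:
    """Monthly cost for instances that run (and bill) whether or not they are busy."""
    return instances * hourly_price * hours


# Hypothetical workload: 2M requests per month, 200 ms each, 512 MB of memory.
serverless = pay_per_use_cost(
    invocations=2_000_000, avg_duration_s=0.2, memory_gb=0.5,
    price_per_gb_second=0.00002, price_per_million_requests=0.20,
)
fixed = always_on_cost(instances=2, hourly_price=0.05)

print(f"pay-per-use: {serverless:.2f}  always-on: {fixed:.2f}")
```

The comparison flips for sustained, high-volume traffic, where per-invocation charges can exceed the cost of reserved capacity, so the model is most useful for finding that break-even point.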

However, serverless isn’t without challenges. Cold starts, vendor lock-in, and debugging complexity persist, though pre-warmed instances mitigate cold starts and open-source serverless frameworks (e.g., Knative, OpenFaaS) reduce lock-in. As cloud-native and serverless converge, a theme explored in the next chapter, hybrid models leveraging containers and functions will redefine scalability. By 2025, serverless won’t just complement cloud-native; it will be the default for agile, cost-effective innovation.

Integrating Serverless with Cloud-Native

The convergence of cloud-native and serverless architectures is reshaping how modern applications are built, deployed, and scaled. While cloud-native principles emphasize containerization, microservices, and declarative infrastructure, serverless computing abstracts away infrastructure management entirely. In 2025, these paradigms are no longer competing alternatives but complementary forces driving efficiency and agility.

One of the most transformative synergies is the rise of serverless containers, blending the portability of containers with the operational simplicity of serverless. Platforms like AWS Fargate, Google Cloud Run, and Azure Container Apps enable developers to deploy containerized workloads without managing underlying servers. This hybrid approach eliminates the need for manual scaling, patching, or capacity planning while retaining the flexibility of containerized environments.

Cloud-native tooling is also evolving to embrace serverless patterns. Serverless frameworks such as Knative and OpenFaaS run on top of Kubernetes, allowing developers to run serverless functions alongside traditional microservices on the same cluster. This unification streamlines workflows, reduces fragmentation, and lets both kinds of workload scale under one control plane.

From a business perspective, this integration translates to faster innovation cycles. Companies can leverage cloud-native best practices—such as immutable infrastructure and CI/CD pipelines—while benefiting from serverless cost models, where billing is tied to actual usage rather than reserved capacity. The result is a more dynamic, cost-efficient development lifecycle that adapts to fluctuating demand without sacrificing reliability.

As these technologies continue to merge, the distinction between serverless and cloud-native will blur further. The future belongs to architectures that harness both—delivering the scalability of serverless with the modularity and control of cloud-native design.

The Rise of Serverless Containers

As cloud-native and serverless technologies evolve, serverless containers are emerging as a transformative model, blending the best of both worlds. Unlike traditional containers that require manual orchestration or pure serverless functions with limited runtime flexibility, serverless containers offer scalability without sacrificing control. Platforms like AWS Fargate, Google Cloud Run, and Azure Container Apps abstract infrastructure management while allowing developers to package applications in containers, delivering efficiency and ease of use together.

For businesses, this means faster innovation cycles with reduced operational overhead. Serverless containers automatically scale based on demand, eliminating the need for capacity planning. Costs align closely with actual usage, making them ideal for variable workloads. Unlike traditional serverless functions, which may struggle with stateful applications or long-running processes, containers support a broader range of use cases—from microservices to batch processing—without compromising agility.

The adaptability of serverless containers also addresses vendor lock-in concerns. Since containers are portable, businesses can migrate workloads across clouds or hybrid environments with minimal friction. This flexibility is critical as organizations adopt multi-cloud strategies (a theme explored in the next chapter) to optimize resilience and cost.

Looking ahead, advancements in cold-start optimization and event-driven integrations will further solidify serverless containers as a go-to solution. By 2025, expect deeper integration with AI/ML pipelines and edge computing, enabling real-time processing at scale. For enterprises balancing innovation with operational simplicity, serverless containers represent a pivotal step toward future-proof cloud-native development.

Hybrid and Multi-Cloud Strategies

As cloud-native and serverless architectures mature, businesses are increasingly adopting hybrid and multi-cloud strategies to maximize flexibility, resilience, and cost efficiency. These models allow organizations to distribute workloads across multiple environments—public clouds, private data centers, and edge locations—while avoiding vendor lock-in and optimizing performance. By 2025, the ability to seamlessly integrate these diverse infrastructures will be a key differentiator for enterprises scaling their applications.

One of the primary drivers behind hybrid and multi-cloud adoption is workload optimization. Certain applications benefit from the low-latency processing of edge or private clouds, while others thrive in the elastic scalability of public cloud providers. For instance, AI-driven analytics might run on a high-performance private cluster, whereas customer-facing serverless APIs leverage AWS Lambda or Azure Functions. Kubernetes and service meshes like Istio play a pivotal role in orchestrating these distributed systems, ensuring consistent deployment, security, and observability across environments.

Resilience is another critical factor. Multi-cloud architectures mitigate downtime risks by spreading workloads across providers, reducing dependency on a single vendor. Meanwhile, hybrid models enable compliance-sensitive industries to keep regulated data on-premises while tapping into cloud-native innovations. Tools like Terraform and Crossplane simplify infrastructure provisioning, allowing teams to manage hybrid deployments with declarative automation.

However, complexity remains a challenge. Organizations must invest in unified monitoring, governance, and security frameworks to maintain control. Emerging solutions, such as cloud-native application platforms (CNAPs), abstract these complexities, enabling developers to focus on innovation rather than infrastructure wrangling. As the next chapter will explore, Internal Developer Platforms (IDPs) further streamline this by standardizing workflows, bridging the gap between multi-cloud flexibility and operational simplicity.

Empowering Development with Internal Developer Platforms

As cloud-native and serverless architectures become the backbone of modern applications in 2025, Internal Developer Platforms (IDPs) are emerging as critical enablers for engineering teams. These platforms abstract the complexity of cloud infrastructure, allowing developers to focus on writing code rather than managing deployments, scaling, or security policies. By unifying workflows, IDPs bridge the gap between hybrid and multi-cloud environments—discussed in the previous chapter—and the evolving security paradigms required for distributed systems, which we’ll explore next.

IDPs consolidate essential tools—such as CI/CD pipelines, observability dashboards, and infrastructure provisioning—into a single, developer-friendly interface. This reduces cognitive load and accelerates delivery cycles, particularly for serverless applications where rapid iteration is key. For example, an IDP might automate the deployment of a serverless function across multiple cloud providers, ensuring compliance with security policies while abstracting away the underlying orchestration.

Key benefits of IDPs in 2025 include:

- Standardization: Enforcing best practices for cloud-native development, such as immutable infrastructure and GitOps workflows, across teams.

- Self-Service Capabilities: Empowering developers to provision resources on-demand without relying on platform engineering teams.

- Observability Integration: Embedding monitoring and logging directly into the development workflow, aligning with the security needs of ephemeral workloads.

As organizations scale, IDPs also mitigate the risks of tool sprawl—a common challenge in hybrid and multi-cloud setups. By providing a unified layer, they ensure consistency while maintaining the flexibility to leverage cloud-specific services. Looking ahead, IDPs will play a pivotal role in balancing agility with governance, setting the stage for the next chapter’s discussion on securing dynamic, cloud-native environments.

Evolving Security Models for Cloud-Native

As cloud-native and serverless architectures become the backbone of scalable applications in 2025, security models must evolve to address their unique challenges. The ephemeral nature of serverless functions, distributed microservices, and dynamic orchestration demand a shift from traditional perimeter-based security to zero-trust and runtime-aware approaches.

One critical trend is the adoption of identity-first security, where every workload, service, and API call is authenticated and authorized dynamically. With serverless functions spinning up and down in milliseconds, static credentials are a liability. Instead, short-lived tokens and service mesh-based mutual TLS (mTLS) ensure secure communication between ephemeral components.
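A short-lived credential can be sketched with nothing more than an HMAC signature and an expiry timestamp. The example below is a simplified stand-in for the tokens a real identity system would issue: the token layout, the `issue_token` and `verify_token` helpers, and the shared secret are all assumptions, and production systems typically rely on established standards and asymmetric keys instead.

```python
import base64
import hashlib
import hmac
import json
import time
from typing import Optional


def issue_token(workload_id: str, secret: bytes, ttl_seconds: int = 300,
                now: Optional[float] = None) -> str:
    """Sign a payload naming the workload and the moment the token expires."""
    issued_at = time.time() if now is None else now
    payload = json.dumps({"sub": workload_id, "exp": issued_at + ttl_seconds},
                         sort_keys=True).encode()
    signature = hmac.new(secret, payload, hashlib.sha256).digest()
    return (base64.urlsafe_b64encode(payload).decode() + "."
            + base64.urlsafe_b64encode(signature).decode())


def verify_token(token: str, secret: bytes,
                 now: Optional[float] = None) -> Optional[str]:
    """Return the workload id if the token is authentic and unexpired, else None."""
    try:
        payload_b64, signature_b64 = token.split(".")
        payload = base64.urlsafe_b64decode(payload_b64)
        signature = base64.urlsafe_b64decode(signature_b64)
    except ValueError:  # wrong number of parts or invalid base64
        return None
    expected = hmac.new(secret, payload, hashlib.sha256).digest()
    if not hmac.compare_digest(signature, expected):
        return None  # forged or tampered token
    claims = json.loads(payload)
    current = time.time() if now is None else now
    if current >= claims["exp"]:
        return None  # expired: the caller must request a fresh token
    return claims["sub"]


secret = b"demo-secret-for-illustration-only"
token = issue_token("checkout-function", secret, ttl_seconds=60)
print(verify_token(token, secret))
```

Because the token expires on its own, a leaked credential is only useful for the remainder of its lifetime, which is the property that makes short-lived tokens a better fit than static keys for ephemeral functions.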

Another emerging paradigm is behavioral security monitoring. Unlike traditional SIEM systems, modern tools leverage AI to analyze real-time telemetry from distributed workloads, detecting anomalies in API traffic, function invocations, or container behavior. This is crucial for mitigating risks like injection attacks or lateral movement in microservices environments.
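The core idea of behavioral monitoring, flagging activity that departs from an established baseline, can be shown with a plain statistical check. The sketch below uses a z-score over recent per-minute invocation counts as a deliberately simple substitute for the AI-driven models described above; the function name, threshold, and sample data are assumptions.

```python
from statistics import mean, stdev
from typing import Sequence


def is_anomalous(history: Sequence[float], latest: float,
                 threshold: float = 3.0) -> bool:
    """Flag latest if it lies more than threshold standard deviations from the mean."""
    if len(history) < 2:
        return False  # not enough data to establish a baseline
    baseline = mean(history)
    spread = stdev(history)
    if spread == 0:
        return latest != baseline  # any change from a perfectly flat baseline
    return abs(latest - baseline) / spread > threshold


# Hypothetical per-minute invocation counts for one function.
recent_counts = [100, 104, 98, 101, 97, 102]

print(is_anomalous(recent_counts, 103))   # within normal variation
print(is_anomalous(recent_counts, 400))   # sudden burst worth investigating
```

A real detector would account for seasonality and correlate several signals, but the workflow is the same: learn a baseline from telemetry, then alert on deviations.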

Security also extends to the supply chain, where cloud-native applications rely on countless open-source dependencies. In 2025, automated SBOM (Software Bill of Materials) analysis and immutable artifact signing—enforced via policy-as-code—are standard practices to prevent compromised dependencies from entering production.
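An admission check of this kind might look like the following sketch, which rejects a build if its SBOM lists a denied component or if the artifact's digest differs from the one recorded at build time. The SBOM shape, the deny list, and the helper names are assumptions, and the digest comparison stands in for real cryptographic signature verification.

```python
import hashlib
from typing import Dict, List, Set, Tuple


def sbom_violations(components: List[Dict[str, str]],
                    denied: Set[Tuple[str, str]]) -> List[str]:
    """List components whose (name, version) pair appears on the deny list."""
    return [f"{c['name']}@{c['version']}" for c in components
            if (c["name"], c["version"]) in denied]


def digest_matches(artifact: bytes, expected_sha256: str) -> bool:
    """Check that the artifact is byte-for-byte the one recorded at build time."""
    return hashlib.sha256(artifact).hexdigest() == expected_sha256


def admit(artifact: bytes, expected_sha256: str,
          components: List[Dict[str, str]],
          denied: Set[Tuple[str, str]]) -> bool:
    """Policy: deploy only unmodified artifacts with no denied dependencies."""
    return (digest_matches(artifact, expected_sha256)
            and not sbom_violations(components, denied))


# Hypothetical build output, SBOM, and deny list.
artifact = b"example build output"
recorded_digest = hashlib.sha256(artifact).hexdigest()
sbom = [
    {"name": "examplelib", "version": "1.2.0"},
    {"name": "otherlib", "version": "0.9.1"},
]
deny_list = {("otherlib", "0.9.1")}

print(sbom_violations(sbom, deny_list))
print(admit(artifact, recorded_digest, sbom, deny_list))
```

Expressing the rule as code means it can run in the pipeline on every build, rather than relying on a manual review step.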

Finally, shift-left security is no longer optional. Developers embed security checks into CI/CD pipelines using tools like static application security testing (SAST) and dynamic analysis for serverless configurations. Platforms now auto-remediate misconfigurations in IaC (Infrastructure as Code) templates before deployment.
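The detect-and-remediate step can be sketched as a pair of functions that scan a template before deployment and return a corrected copy. The template schema (a `resources` mapping with `type`, `public`, and `timeout_s` fields) and the two rules are invented for illustration and do not correspond to any particular IaC tool.

```python
from copy import deepcopy
from typing import Dict, List, Tuple


def find_misconfigurations(template: Dict) -> List[Tuple[str, str]]:
    """Return (resource, field) pairs that break the two example rules."""
    findings: List[Tuple[str, str]] = []
    for name, resource in template.get("resources", {}).items():
        # Rule 1: storage buckets must not be publicly readable.
        if resource.get("type") == "bucket" and resource.get("public", False):
            findings.append((name, "public"))
        # Rule 2: functions must declare an explicit timeout.
        if resource.get("type") == "function" and "timeout_s" not in resource:
            findings.append((name, "timeout_s"))
    return findings


def remediate(template: Dict, default_timeout_s: int = 30) -> Dict:
    """Return a corrected copy of the template, leaving the original untouched."""
    fixed = deepcopy(template)
    for name, field in find_misconfigurations(fixed):
        if field == "public":
            fixed["resources"][name]["public"] = False
        else:
            fixed["resources"][name]["timeout_s"] = default_timeout_s
    return fixed


# Hypothetical template with one violation of each rule.
template = {
    "resources": {
        "uploads": {"type": "bucket", "public": True},
        "resize": {"type": "function", "memory_mb": 256},
    }
}

print(find_misconfigurations(template))
print(find_misconfigurations(remediate(template)))
```

Running a check like this in the CI pipeline surfaces the problem in the pull request, where it is cheapest to fix, instead of after deployment.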

These innovations ensure security keeps pace with the agility of cloud-native and serverless architectures, enabling enterprises to scale confidently—setting the stage for broader industrial adoption, as explored in the next chapter.

Industrial Adoption and Business Implications

As enterprises accelerate their digital transformation journeys, cloud-native and serverless architectures have become the backbone of scalable, cost-efficient, and agile application development. By 2025, industries ranging from finance to healthcare are leveraging these paradigms to drive innovation, reduce time-to-market, and optimize operational costs.

In financial services, serverless computing enables real-time fraud detection by processing vast transaction datasets without provisioning infrastructure. Banks deploy event-driven architectures to scale dynamically during peak loads, reducing idle resource costs while maintaining compliance. Meanwhile, healthcare providers use cloud-native platforms to orchestrate microservices for patient data analytics, ensuring HIPAA-compliant interoperability across distributed systems.

Retailers harness serverless APIs and Kubernetes-powered platforms to deliver hyper-personalized shopping experiences. Event-driven inventory management systems auto-scale during Black Friday surges, eliminating over-provisioning. Similarly, manufacturing integrates IoT with serverless backends to process sensor data in real time, predicting equipment failures and minimizing downtime.

The business impact is profound:

- Faster innovation cycles: By abstracting infrastructure, teams focus on feature development, slashing deployment times from weeks to hours.

- Elastic scalability: Auto-scaling eliminates over-provisioning, with pay-per-use models cutting cloud spend by up to 70%.

- Operational resilience: Multi-cloud serverless deployments ensure fault tolerance without manual intervention.

However, success hinges on aligning these architectures with business goals. Companies like Netflix and Capital One exemplify this—using serverless to handle unpredictable workloads while maintaining security, as discussed in the prior chapter. As cloud-native adoption grows, the next chapter explores how these technologies democratize access to advanced compute power, further accelerating industry-wide innovation.

Democratization and Innovation Through Cloud Technologies

The democratization of advanced computing capabilities through cloud-native and serverless architectures is reshaping innovation in 2025. By abstracting infrastructure complexities, these technologies enable businesses of all sizes, from startups to enterprises, to experiment with cutting-edge tools like quantum computing, AI/ML orchestration, and edge intelligence without prohibitive hardware investments. Cloud providers now offer access to quantum processing units (QPUs) through managed, pay-per-use services such as Amazon Braket and Azure Quantum, allowing developers to submit quantum circuits through API calls. This removes the need for on-premises quantum hardware, although designing useful quantum algorithms still requires specialized expertise.

Serverless platforms further accelerate this shift by enabling pay-per-use access to high-performance computing (HPC) resources. A biotech startup, for instance, can run genomic simulations using serverless HPC clusters, scaling compute power dynamically while avoiding upfront costs. Similarly, AI model training—once restricted to organizations with GPU farms—is now accessible through serverless ML pipelines, reducing time-to-market for data-driven products.

The implications extend beyond cost efficiency. By lowering barriers to entry, cloud-native ecosystems foster a culture of rapid experimentation. Developers can prototype with blockchain, spatial computing, or real-time analytics using managed services, iterating faster than traditional infrastructure allows. Open-source serverless frameworks like Knative and OpenFaaS amplify this effect, enabling hybrid-cloud portability and avoiding vendor lock-in.

However, challenges remain. While cloud providers simplify access, optimizing performance for specialized workloads—such as quantum-resistant cryptography or federated learning—still requires deep expertise. The next wave of innovation will hinge on balancing ease of use with advanced customization, ensuring that democratization doesn’t come at the cost of capability. As the following chapter explores, this balance will define the future landscape of cloud computing beyond 2025.

The Future Landscape of Cloud Computing

By 2025, cloud-native and serverless architectures will no longer be emerging trends but foundational pillars of scalable application development. The convergence of Function-as-a-Service (FaaS) and event-driven architecture (EDA) will redefine how businesses deploy and manage applications, reducing infrastructure concerns while maximizing efficiency. The shift toward granular, event-based computing will enable enterprises to build systems that respond in real time to dynamic workloads, reducing costs and improving agility.

Open-source solutions will play an increasingly dominant role, driving standardization and interoperability across cloud providers. Projects like Kubernetes, Knative, and OpenFaaS will mature, offering enterprises more flexibility in avoiding vendor lock-in while maintaining portability. The rise of multi-cloud serverless platforms will further blur the lines between providers, allowing developers to seamlessly orchestrate functions across AWS Lambda, Azure Functions, and Google Cloud Run without rewriting code.

Beyond 2025, we anticipate a deeper integration of AI-driven automation in serverless ecosystems. Self-optimizing functions, predictive scaling, and intelligent resource allocation will minimize manual intervention, making cloud-native applications even more resilient. Edge computing will also merge with serverless models, enabling low-latency processing for IoT and real-time analytics without centralized cloud dependencies.

The business impact will be profound—organizations will shift from cost-centric cloud adoption to value-driven innovation, leveraging serverless to experiment rapidly and scale effortlessly. However, challenges like security, observability, and cold-start performance will demand new tooling and best practices. As cloud-native and serverless technologies evolve, they will not just support but actively shape the next wave of digital transformation, making scalability and efficiency intrinsic to modern software development.

Conclusions

In 2025, the fusion of cloud-native and serverless technologies signifies a substantial leap towards the creation of resilient, scalable, and efficient digital applications. This convergence, supported by hybrid cloud strategies and innovative development platforms, embodies the future of agile and cost-effective IT infrastructure, setting a new benchmark for digital transformation.