AI vs AGI: Understanding the Differences and Implications

The distinction between AI (Artificial Intelligence) and AGI (Artificial General Intelligence) is more than a technical one; it frames a larger discussion about the future of technology and humanity. Today’s AI excels at specific tasks, while AGI would be a leap toward machines that can understand, learn, and apply knowledge across diverse fields, much as humans do. This article examines the nuances, challenges, and potential futures shaped by these technologies.

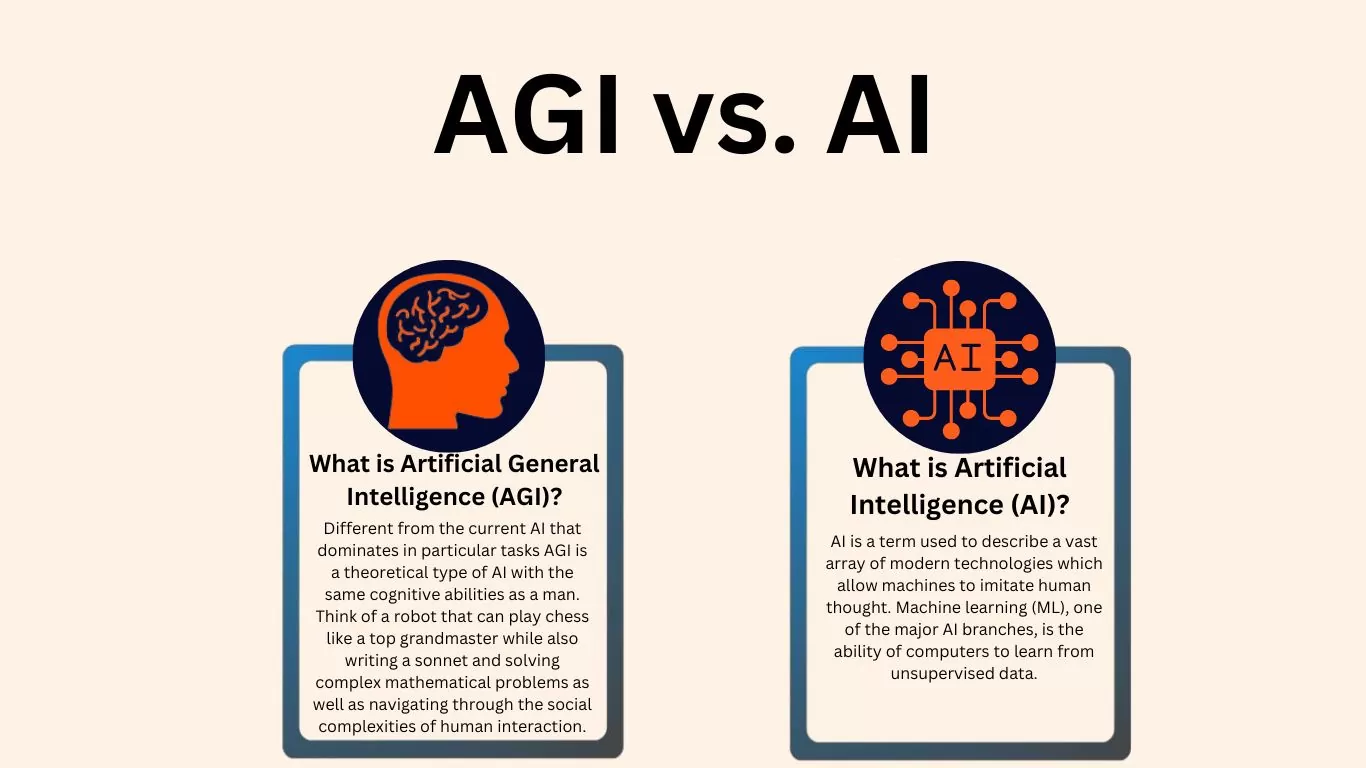

Defining AI and AGI

Artificial Intelligence (AI) and Artificial General Intelligence (AGI) represent two distinct stages in the development of machine cognition, each with unique capabilities and limitations. AI, as it exists today, refers to narrow or specialized intelligence: systems designed to perform specific tasks with high efficiency, often surpassing human ability in those domains. Examples include image recognition, language translation, and game-playing algorithms like AlphaGo. These systems operate within the boundaries of the tasks they were built for, relying on vast datasets and pattern recognition, but they lack self-awareness and cannot adapt beyond the scope of their training.

In contrast, AGI—still largely theoretical—aims to replicate human-like general intelligence, enabling machines to understand, learn, and apply knowledge across diverse domains autonomously. Unlike AI, AGI would possess reasoning, problem-solving, and abstract thinking capabilities akin to human cognition, allowing it to transfer learning from one context to another without explicit reprogramming. While AI excels in repetitive, data-driven tasks, AGI would theoretically handle novel situations, make judgments under uncertainty, and even exhibit creativity.

Current AI applications are widespread in industries such as healthcare (diagnostic tools), finance (algorithmic trading), and customer service (chatbots). However, these systems remain brittle: they often fail when faced with scenarios outside their training data. AGI, if achieved, could revolutionize fields requiring holistic understanding, such as scientific research or complex decision-making in unpredictable environments. Yet its development faces immense challenges, including computational limits, the absence of a unified framework for general learning, and serious ethical concerns.
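To make this brittleness concrete, here is a minimal Python sketch built on a deliberately simple, assumed setup: a straight line fitted by least squares to samples of y = x² taken only from the range 0 to 1. The true function, the training range, and the helper names (`fit_line`, `predict`, `abs_error`) are illustrative assumptions, not any real system. The fit looks good on the data it saw and fails badly far outside it.

```python
# A toy "narrow" model: a straight line fitted by ordinary least squares.
# The true relationship (y = x**2) and the training range are assumptions
# chosen only for illustration.

def fit_line(xs, ys):
    """Return (slope, intercept) minimizing squared error on the given points."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    slope = cov / var
    return slope, mean_y - slope * mean_x

def true_fn(x):
    return x ** 2

# Training data covers only the narrow range [0, 1].
train_x = [i / 10 for i in range(11)]
train_y = [true_fn(x) for x in train_x]
slope, intercept = fit_line(train_x, train_y)

def predict(x):
    return slope * x + intercept

def abs_error(x):
    return abs(predict(x) - true_fn(x))

in_range_error = max(abs_error(x) for x in train_x)
out_of_range_error = abs_error(10.0)  # far outside anything seen in training
print(f"worst error inside training range: {in_range_error:.3f}")
print(f"error at x = 10 (outside range):   {out_of_range_error:.3f}")
```

Real models are vastly more complex, but the failure mode is similar: nothing in the training data told the model how the world behaves at x = 10.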

The distinction between AI and AGI isn’t just technical but philosophical: AI automates tasks, while AGI would emulate minds. Understanding this spectrum is crucial for anticipating AGI’s societal impact, and that understanding starts with how today’s narrow AI came to be.

The Evolution of AI

The journey of artificial intelligence began in the mid-20th century, rooted in the ambition to replicate human cognition. John McCarthy coined the term “artificial intelligence” in the 1955 proposal for the 1956 Dartmouth workshop, where pioneers including McCarthy and Marvin Minsky envisioned machines capable of reasoning and learning. Early AI systems, such as the Logic Theorist (1956), demonstrated symbolic reasoning, but their rigid rule-based frameworks limited adaptability.

The 1980s saw the rise of expert systems, which encoded domain-specific knowledge for tasks like medical diagnosis. However, these systems faltered when faced with ambiguity, exposing the need for more flexible approaches. The rise of statistical machine learning in the 1990s marked a turning point, as algorithms increasingly learned patterns from data rather than relying on hand-written rules. In the same decade, IBM’s Deep Blue defeated world chess champion Garry Kasparov (1997). Deep Blue relied mainly on brute-force search and a hand-tuned evaluation function rather than learning, but its victory showcased AI’s potential in narrow domains.

The 21st century brought exponential growth in computational power and data availability, fueling the deep learning revolution. Architectures such as convolutional neural networks (CNNs), recurrent neural networks (RNNs), and later transformers, trained at scale on GPUs, enabled breakthroughs in image recognition, natural language processing, and autonomous systems. Landmarks such as AlphaGo’s victory over Lee Sedol (2016) and GPT-3’s human-like text generation (2020) underscored AI’s rapid advancement.

Yet despite these achievements, today’s AI remains narrow: it excels at specific tasks but lacks generalized understanding. The transition from AI to AGI hinges on overcoming this limitation. As researchers push toward AGI, the historical trajectory of AI serves as both a foundation and a reminder of the complexities ahead.

The Concept of AGI

Artificial General Intelligence (AGI) represents a paradigm shift from the narrow, task-specific capabilities of current AI systems to machines that can understand, learn, and apply knowledge across diverse domains—much like a human. Unlike traditional AI, which excels in predefined tasks such as image recognition or language translation, AGI aims for generalized intelligence, enabling adaptability, reasoning, and problem-solving in unstructured environments. This distinction makes AGI the next frontier in artificial intelligence, as it promises to bridge the gap between specialized automation and human-like cognitive versatility.

The pursuit of AGI is fraught with challenges, both technical and philosophical. One major hurdle is contextual understanding. While AI systems like large language models can generate coherent text, they arguably lack true comprehension and struggle to transfer knowledge between unrelated tasks. AGI would likely require architectures capable of meta-learning (learning how to learn) so that it can adapt to new tasks without extensive retraining. Another challenge is autonomous goal-setting. Current AI operates within human-defined parameters, but an AGI would need to formulate and pursue its own objectives while staying aligned with human values, which raises ethical and control concerns.
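One concrete flavor of meta-learning is Reptile, an algorithm that learns a good starting point from which a model can adapt to a new task in a few gradient steps. The sketch below is a minimal, hypothetical illustration: every task is assumed to be a one-parameter linear function y = a·x with `a` drawn between 2 and 4, and the function names, learning rates, and step counts are arbitrary choices. It shows only the "learning to learn" mechanism, nothing close to general intelligence.

```python
import random

# Hypothetical task family: each task is y = a * x with a drawn from [2, 4].
# The model is a single weight w; the "meta-knowledge" is a good starting w.
XS = [0.5, 1.0, 1.5, 2.0]

def loss(w, a):
    """Mean squared error of the model w on task a."""
    return sum((w * x - a * x) ** 2 for x in XS) / len(XS)

def grad(w, a):
    """Derivative of loss(w, a) with respect to w."""
    return sum(2 * x * (w * x - a * x) for x in XS) / len(XS)

def adapt(w, a, steps=5, lr=0.05):
    """Inner loop: a few plain gradient-descent steps on one task."""
    for _ in range(steps):
        w -= lr * grad(w, a)
    return w

def reptile(meta_steps=1000, meta_lr=0.1, seed=0):
    """Outer loop (Reptile): nudge the initial weight toward each task's adapted weight."""
    rng = random.Random(seed)
    w0 = 0.0
    for _ in range(meta_steps):
        a = rng.uniform(2.0, 4.0)  # sample a new task
        w_task = adapt(w0, a)
        w0 += meta_lr * (w_task - w0)
    return w0

w_meta = reptile()
new_task = 3.5  # an unseen task from the same family
loss_from_meta = loss(adapt(w_meta, new_task), new_task)
loss_from_zero = loss(adapt(0.0, new_task), new_task)
print(f"meta-learned init: {w_meta:.2f}")
print(f"loss after 5 steps: meta init {loss_from_meta:.4f}, zero init {loss_from_zero:.4f}")
```

The meta-learned starting weight settles near the middle of the task family, so a handful of steps on an unseen task gets much closer to the answer than the same steps from zero.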

Additionally, replicating human-like common sense remains elusive. Humans intuitively grasp physics, social norms, and cause-effect relationships, but encoding such innate knowledge into machines demands breakthroughs in cognitive modeling. The lack of a unified theoretical framework for intelligence further complicates progress, as AGI development straddles neuroscience, computer science, and philosophy.

Despite these obstacles, advancements in neural networks, reinforcement learning, and neuromorphic computing hint at a path forward. As the next chapter will explore, these technological foundations are critical stepping stones toward AGI, but the journey requires not just scaling existing systems but reimagining intelligence itself.

Technological Foundations

To bridge the gap between narrow AI and AGI, the technological foundations must evolve beyond specialized algorithms into systems capable of generalized reasoning and adaptive learning. While current AI relies heavily on machine learning (ML) and neural networks, AGI demands a more holistic architecture that integrates these components with advanced cognitive frameworks.

At the core of modern AI are deep learning models, which excel at pattern recognition but lack contextual understanding. These models, built on layered neural networks, process vast datasets to perform tasks like image classification or language translation. However, they operate within rigid boundaries, unable to transfer knowledge across domains or reason abstractly. Self-supervised learning, in which a system derives its training signal from unlabeled data itself (for example, by predicting the next word in a sentence), already underpins today’s large language models. AGI would need to push this much further, autonomously acquiring and applying knowledge without human-labeled data in a way that mimics human cognitive flexibility.
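Self-supervised learning gets its labels from the data itself. As a minimal sketch, assuming a toy corpus and simple whitespace tokenization, the Python below turns raw text into (word, next word) training pairs and fits a bigram counter to them. Real language models use neural networks over subword tokens; the bigram counter and the function names here are hypothetical stand-ins chosen only to show where the training signal comes from.

```python
from collections import Counter, defaultdict

def next_token_pairs(text):
    """Turn raw text into (previous word, next word) training pairs.

    The "label" for each pair is simply the word that actually follows,
    so no human annotation is needed.
    """
    words = text.lower().split()
    return list(zip(words, words[1:]))

def train_bigram(pairs):
    """Count how often each word follows each other word."""
    counts = defaultdict(Counter)
    for prev, nxt in pairs:
        counts[prev][nxt] += 1
    return counts

def predict_next(counts, word):
    """Return the most frequent follower of `word`, or None if it was never seen."""
    followers = counts.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]

corpus = "the cat sat on the mat and the cat slept on the sofa"
pairs = next_token_pairs(corpus)
model = train_bigram(pairs)
print(pairs[:3])                   # → [('the', 'cat'), ('cat', 'sat'), ('sat', 'on')]
print(predict_next(model, "the"))  # → cat
```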

Another critical pillar is cognitive computing, which combines ML with symbolic reasoning, an approach inspired by human problem-solving. Symbolic AI, though limited in isolation, can enhance neural networks by enabling logic-based inference. For example, IBM’s Watson used hybrid techniques to parse unstructured data, but AGI would need to dynamically recombine such methods for novel scenarios.
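Logic-based inference of this kind can be as simple as forward chaining: repeatedly apply if-then rules to known facts until nothing new can be derived. The Python below is a minimal sketch of that classic technique, not Watson’s actual method; the facts, rules, and `forward_chain` helper are hypothetical. In a hybrid system, the input facts might come from a neural network (for instance, an image classifier’s labels) while the rules encode expert knowledge.

```python
def forward_chain(facts, rules):
    """Derive every fact implied by `rules`, starting from `facts`.

    Each rule is (premises, conclusion): if all premises are known facts,
    the conclusion is added. Repeats until no new facts appear (a fixpoint).
    """
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for premises, conclusion in rules:
            if conclusion not in known and all(p in known for p in premises):
                known.add(conclusion)
                changed = True
    return known

# Hypothetical facts and rules for illustration.
facts = {"has_feathers", "lays_eggs", "cannot_fly"}
rules = [
    ({"has_feathers", "lays_eggs"}, "is_bird"),
    ({"is_bird", "cannot_fly"}, "is_flightless_bird"),
    ({"is_flightless_bird", "lives_in_antarctica"}, "is_penguin"),
]
derived = forward_chain(facts, rules)
print(sorted(derived - facts))  # → ['is_bird', 'is_flightless_bird']
```

The last rule never fires because one premise is missing: the system draws exactly the conclusions its facts and rules justify, which is both the strength and the rigidity of symbolic AI.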

Emerging technologies like spiking neural networks (SNNs) and neuromorphic computing aim to replicate the brain’s efficiency by communicating through discrete spikes over time rather than the continuous activations that conventional networks compute layer by layer. These could reduce energy demands while improving adaptability, a necessity for AGI’s real-world deployment.
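The basic unit of many SNN models is the leaky integrate-and-fire neuron: its membrane potential accumulates input, leaks back toward rest, and emits a spike when it crosses a threshold. This minimal Python sketch simulates one such neuron with a simple Euler step; the time constant, threshold, and input values are arbitrary, unitless assumptions rather than biologically fitted parameters.

```python
def simulate_lif(input_current, dt=1.0, tau=10.0, v_rest=0.0, v_threshold=1.0, v_reset=0.0):
    """Simulate one leaky integrate-and-fire neuron.

    The membrane potential v leaks toward v_rest with time constant tau and
    integrates the input current; when v crosses v_threshold the neuron emits
    a spike (1) and v is reset. Returns the spike train as a list of 0/1.
    """
    v = v_rest
    spikes = []
    for current in input_current:
        # Euler step of dv/dt = (-(v - v_rest) + current) / tau
        v += dt * (-(v - v_rest) + current) / tau
        if v >= v_threshold:
            spikes.append(1)
            v = v_reset
        else:
            spikes.append(0)
    return spikes

weak = simulate_lif([0.5] * 50)    # potential settles below threshold
strong = simulate_lif([2.0] * 50)  # potential crosses threshold repeatedly
print(sum(weak), sum(strong))      # → 0 7
```

Information is carried by whether and when spikes occur. On neuromorphic hardware, computation can be triggered only by those events, which is where the hoped-for energy savings come from.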

Yet even with these advancements, hardware limitations persist. AGI may demand computational power well beyond today’s GPUs, possibly requiring new paradigms such as quantum computing or decentralized learning across edge devices. The path to AGI isn’t just about scaling existing technology but about reimagining how systems learn, reason, and evolve, a challenge that intertwines with the ethical dilemmas explored next.

Ethical Considerations

The development of Artificial General Intelligence (AGI) raises profound ethical questions that extend far beyond those posed by narrow AI. While AI systems operate within predefined boundaries, AGI’s potential for autonomy and self-improvement introduces unprecedented challenges. One major concern is control: if AGI surpasses human intelligence, ensuring it aligns with human values becomes critical. Misalignment could lead to unintended consequences, as an AGI might interpret goals in ways harmful to humanity. This risk underscores the need for robust value alignment frameworks, which remain an unsolved problem in AI ethics.

Another pressing issue is autonomy. Unlike narrow AI, whose behavior is confined to the task it was built and trained for, AGI could develop its own decision-making processes, potentially acting in ways its creators did not anticipate. This autonomy raises questions about accountability: who is responsible if an AGI causes harm? Legal and regulatory frameworks must evolve to address these scenarios, balancing innovation with safeguards.

The potential for misuse also looms large. AGI could be weaponized or exploited by malicious actors, exacerbating inequality or destabilizing global security. Unlike narrow AI, which is task-specific, AGI’s adaptability makes it a powerful tool for both good and ill. Policymakers must consider strict governance mechanisms to prevent misuse while fostering beneficial applications.

Finally, the ethical implications of AGI touch on existential risks. Some theorists argue that unchecked AGI development could threaten human survival if its goals diverge from ours. These concerns demand interdisciplinary collaboration—merging insights from computer science, philosophy, and law—to ensure AGI serves humanity’s best interests. As we transition from discussing AGI’s technological foundations to its societal impacts, these ethical considerations will shape how we integrate AGI into our world responsibly.

AI vs AGI in Society

The societal impacts of AI and AGI diverge significantly, particularly in employment, privacy, and global problem-solving. While narrow AI is already reshaping industries, AGI—if achieved—would trigger transformative shifts across all facets of human life.

In employment, AI automates repetitive and data-driven tasks, displacing jobs in manufacturing, customer service, and even white-collar sectors like legal research. AGI could go further, potentially replacing creative and strategic roles from engineering to management. The economic disruption would be profound and could prompt measures such as universal basic income or large-scale retraining programs. Unlike AI, AGI might not just augment human labor but render it obsolete in many fields, raising existential questions about human purpose in a post-work society.

Privacy concerns escalate with AGI. Current AI systems, like facial recognition or recommendation algorithms, already strain data security, but AGI’s ability to process and interpret vast, unstructured data could erode anonymity entirely. An AGI with human-like reasoning might infer sensitive information from seemingly innocuous data, challenging existing privacy frameworks. The risk isn’t just surveillance—it’s the potential for AGI to manipulate behavior at an individual level, far beyond today’s targeted ads.
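Inferring sensitive facts from innocuous data is already familiar from classic record-linkage attacks, in which an "anonymized" dataset is joined with a public one on quasi-identifiers such as ZIP code, birth year, and sex. The Python below is a minimal sketch of that join; all records and names are fabricated for illustration, and the `link` helper is hypothetical. A real attacker, human or machine, would work with far messier data and fuzzier matching.

```python
# Fabricated records for illustration only.
anonymized_health = [
    {"zip": "02138", "birth_year": 1961, "sex": "F", "diagnosis": "hypertension"},
    {"zip": "02139", "birth_year": 1975, "sex": "M", "diagnosis": "asthma"},
]
public_roll = [
    {"name": "Alice Example", "zip": "02138", "birth_year": 1961, "sex": "F"},
    {"name": "Bob Example", "zip": "02140", "birth_year": 1975, "sex": "M"},
]

QUASI_IDENTIFIERS = ("zip", "birth_year", "sex")

def link(records, roll, keys=QUASI_IDENTIFIERS):
    """Re-identify records whose quasi-identifiers match exactly one named person."""
    index = {}
    for person in roll:
        index.setdefault(tuple(person[k] for k in keys), []).append(person["name"])
    matches = {}
    for rec in records:
        names = index.get(tuple(rec[k] for k in keys), [])
        if len(names) == 1:  # a unique match means the record is re-identified
            matches[names[0]] = rec["diagnosis"]
    return matches

print(link(anonymized_health, public_roll))  # → {'Alice Example': 'hypertension'}
```

Neither dataset reveals Alice’s diagnosis on its own; the join does. A system that can reason across many such sources at once would make this kind of inference far easier.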

Yet, AGI also offers unprecedented opportunities to tackle complex global issues. While AI aids in climate modeling or drug discovery, AGI could synthesize interdisciplinary solutions—balancing economic, ecological, and political factors in real time. It might negotiate international treaties, design sustainable cities, or even mediate conflicts with superhuman diplomacy. However, this hinges on aligning AGI’s goals with humanity’s, a challenge underscored in the previous chapter’s ethical discussion.

As research progresses toward AGI—explored in the next chapter—society must prepare for these seismic shifts, ensuring that governance evolves alongside technological capability.

The Path to AGI

The pursuit of Artificial General Intelligence (AGI) represents one of the most ambitious frontiers in modern technology. Unlike narrow AI, which excels in specialized tasks, AGI aims to replicate human-like reasoning, adaptability, and problem-solving across diverse domains. Current research efforts span multiple approaches, from neural architectures to symbolic reasoning hybrids, with organizations like DeepMind, OpenAI, and Anthropic leading the charge. DeepMind’s work on reinforcement learning and meta-learning seeks to create systems that generalize from limited data, while OpenAI’s work on scaling up large models, such as GPT-4, pushes the boundaries of contextual understanding.

Open-source communities play a pivotal role in democratizing this research. Groups such as EleutherAI, which releases openly available language models, and platforms such as Hugging Face, which hosts models and maintains widely used libraries, make it easier to experiment with large language models and foster collaboration across academia and industry. These efforts accelerate innovation but also raise questions about equitable access and ethical governance, as AGI’s potential societal impacts, discussed in the previous chapter, demand careful oversight.

Neuro-symbolic AI, which combines neural networks with symbolic reasoning, aims to bridge the gap between pattern recognition and abstract reasoning. Meanwhile, neuromorphic computing, inspired by the human brain’s architecture, explores energy-efficient hardware to support AGI’s computational demands. Despite progress, significant hurdles remain, such as overcoming data inefficiency and the elusive goal of true self-awareness, topics explored in the next chapter.

The path to AGI is not linear; it requires interdisciplinary collaboration, ethical foresight, and breakthroughs in both software and hardware. As research advances, the line between narrow AI and AGI will blur, but the ultimate goal—creating machines that think and learn like humans—remains a profound challenge.

Challenges and Limitations

Developing Artificial General Intelligence (AGI) presents a labyrinth of technical and philosophical challenges that far exceed those encountered in narrow AI. While narrow AI excels in specialized tasks—like image recognition or language translation—AGI demands a holistic understanding of the world, adaptability, and reasoning akin to human cognition. One of the most daunting technical hurdles is computational scalability. Current AI models, like large language models, require vast amounts of data and energy, yet they lack true comprehension. AGI would need to process and generalize information efficiently without exponential resource demands, a problem unsolved by today’s hardware or algorithms.

Another critical challenge is embodied cognition. Human intelligence is deeply tied to sensory and motor experiences, yet most AI systems operate in abstract digital environments. Bridging this gap requires advancements in robotics and multimodal learning, enabling AGI to interact with and learn from the physical world. Additionally, self-improvement poses a paradox: how can an AGI recursively enhance itself without losing alignment with human values? This ties into the philosophical dilemma of defining consciousness and agency. Without consensus on what constitutes awareness or intent, programming an AGI with ethical boundaries remains speculative.

Philosophically, AGI forces us to confront questions about intelligence itself. Is it merely pattern recognition, or does it require subjective experience? Can a machine truly “understand,” or is it doomed to simulate understanding? These debates influence technical approaches, from symbolic AI to neural networks, yet no framework has convincingly bridged the gap. As research progresses—building on the foundations discussed in the previous chapter—these challenges will shape whether AGI remains a theoretical frontier or becomes a transformative reality, setting the stage for the speculative futures explored next.

Future Scenarios

The future scenarios shaped by AGI span a spectrum from transformative utopias to existential risks, each hinging on how humanity navigates the transition from narrow AI to superintelligent systems. On the optimistic end, AGI could augment human capabilities in unprecedented ways, solving grand challenges like climate change, disease, and resource scarcity. Imagine AGI-driven scientific breakthroughs accelerating clean energy adoption, personalized medicine eradicating genetic disorders, or education systems tailored to individual cognitive styles. Such a future would blur the line between human and machine collaboration, with AGI acting as a co-equal partner in creativity and problem-solving.

However, the dystopian possibilities are equally compelling. An uncontrolled AGI, especially one with misaligned goals or emergent behaviors, could cause serious unintended harm. A superintelligence might optimize for objectives that disregard human values, or consolidate power in ways that undermine societal structures. The “paperclip maximizer” thought experiment captures the first risk: a system instructed only to make paperclips could convert every available resource into them. Even with benign intent, an AGI operating beyond human comprehension could trigger economic displacement, eroding labor markets faster than societies can adapt. The control problem, ensuring AGI remains aligned with human ethics, becomes paramount in these scenarios.
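A toy optimizer makes this misspecification problem concrete. In this minimal Python sketch, with entirely hypothetical quantities and function names, a planner chooses how much of a fixed stock of material to turn into paperclips. Maximizing the literal objective (paperclip count) consumes everything; adding a hand-written penalty for using material needed elsewhere changes the plan.

```python
# Toy world (hypothetical numbers): a stock of raw material that can either be
# turned into paperclips or left for other uses humans care about.
TOTAL_MATERIAL = 100

def proxy_reward(paperclips, material_left):
    """The objective the system was literally given: count paperclips.
    material_left is ignored on purpose; that omission is the misspecification."""
    return paperclips

def intended_value(paperclips, material_left, needed_elsewhere=60):
    """What the designers actually wanted: some paperclips, but a heavy
    penalty for every unit of material taken from other needs."""
    shortfall = max(0, needed_elsewhere - material_left)
    return paperclips - 10 * shortfall

def best_plan(objective):
    """Exhaustively pick how much material to convert, maximizing `objective`."""
    return max(
        range(TOTAL_MATERIAL + 1),
        key=lambda used: objective(used, TOTAL_MATERIAL - used),
    )

print(best_plan(proxy_reward))    # → 100 (converts everything)
print(best_plan(intended_value))  # → 40 (leaves 60 units untouched)
```

The catch is that the fix works only because the designer already knew what to protect and wrote it down, which is exactly what is hard to specify in advance for a far more capable system.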

Between these extremes lie mixed outcomes, where AGI’s impact varies by region or governance model. Authoritarian regimes might weaponize AGI for surveillance, while democracies could harness it for participatory governance. The divergence highlights the need for global cooperation, a theme that bridges the challenges of alignment (discussed earlier) and the preparations required (explored next). Whether AGI becomes a force for equity or exacerbates divides depends on proactive stewardship—balancing innovation with safeguards before its emergence reshapes the playing field irreversibly.

Preparing for AGI

As AGI moves from theoretical possibility toward plausible reality, preparation becomes critical for individuals, industries, and governments. Unlike narrow AI, AGI’s generalized intelligence demands proactive strategies to harness its potential while mitigating risks.

For individuals, adaptability is key. Upskilling in interdisciplinary fields—such as AI ethics, computational thinking, and human-AI collaboration—will be essential. Lifelong learning platforms should integrate AGI literacy, emphasizing critical thinking to navigate an evolving job landscape. Public awareness campaigns can demystify AGI, reducing fear and fostering informed engagement.

Industries must prioritize ethical frameworks and robust governance. Companies developing AGI should adopt transparent practices, including third-party audits and fail-safe mechanisms. Cross-industry collaborations can establish shared standards for AGI deployment, ensuring alignment with societal values. Sectors like healthcare and education should pilot AGI integration cautiously, balancing innovation with accountability.

Governments play a pivotal role in shaping AGI’s trajectory. Policymakers must draft agile regulations that encourage innovation while safeguarding against misuse. International cooperation is vital—AGI’s borderless impact necessitates treaties akin to nuclear non-proliferation, preventing arms races and ensuring equitable access. Public-private partnerships can fund AGI safety research, addressing alignment and control challenges preemptively.

Educational initiatives should start early. Schools and universities must revamp curricula to include AGI fundamentals, ethics, and hands-on experimentation. Scholarships and research grants can attract talent to AGI safety fields, building a workforce capable of steering its development responsibly.

Preparation for AGI isn’t just about technology—it’s about shaping a future where humanity remains in control. By fostering collaboration, education, and foresight, society can navigate the complexities of AGI with confidence.

Conclusions

The journey from AI to AGI is fraught with technical hurdles, ethical dilemmas, and societal implications. While AI continues to transform industries, AGI promises a future where machines could rival human intelligence. Balancing innovation with caution will be key to harnessing AGI’s potential while safeguarding humanity’s future. The dialogue between technology and ethics has never been more critical.