Analog, Digital, and Hybrid Computers: Understanding the Evolution and Application

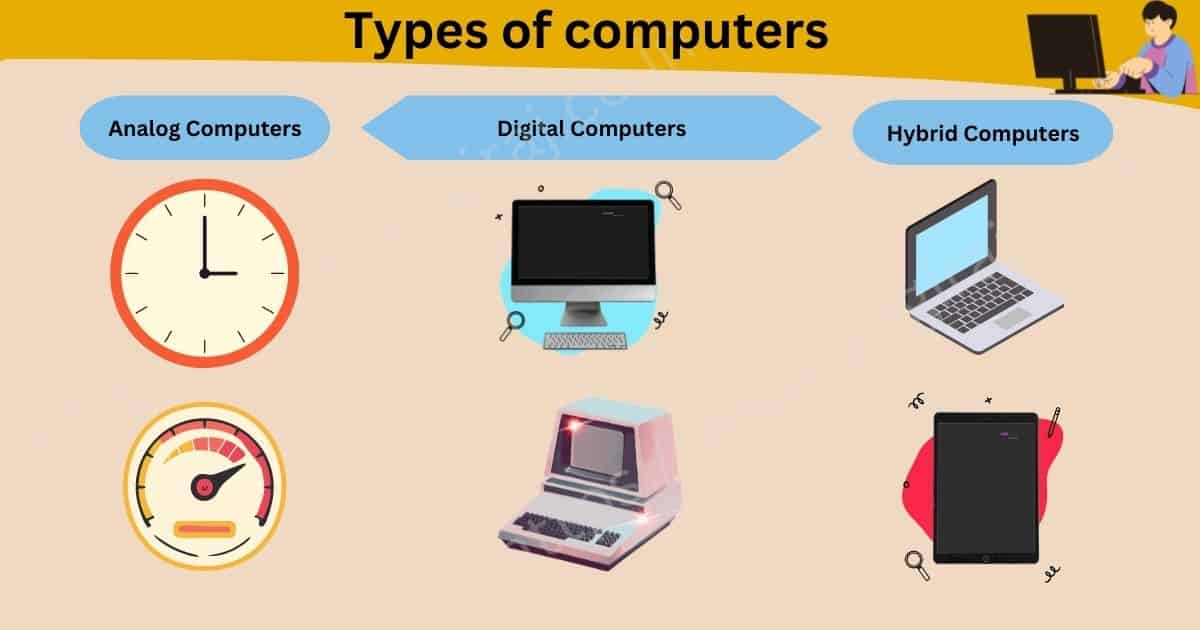

From the mechanical gears of analog computers to the binary logic of digital systems and the integrated solutions of hybrid models, computing technology has evolved dramatically. This article delves into the history, functionality, and applications of analog, digital, and hybrid computers, offering insights into their unique advantages and how they shape our technological landscape.

The Dawn of Computing: Analog Beginnings

The earliest forms of computing relied not on binary digits but on continuous physical phenomena, ushering in the era of analog computers. These machines operated by modeling real-world systems through mechanical or electrical analogs, translating variables like pressure, rotation, or voltage into computational outputs. One of the most remarkable examples is the Antikythera mechanism, an ancient Greek device dating back to around 100 BCE, which used intricate gear systems to predict astronomical positions and eclipses. This artifact demonstrated the potential of analog computation long before the advent of electricity.

In more recent history, the slide rule became a cornerstone of scientific and engineering calculations, enabling rapid multiplication, division, and logarithmic functions through the alignment of logarithmic scales. Its simplicity and portability made it indispensable until the mid-20th century. Meanwhile, larger-scale analog computers, such as the Differential Analyzer developed by Vannevar Bush in 1931, solved complex differential equations by using rotating shafts and gears to simulate dynamic systems. These machines were pivotal in ballistics, aerospace engineering, and early control systems.
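The slide rule's trick of multiplying by aligning logarithmic scales can be sketched in a few lines. This is only an illustration of the underlying identity log(a) + log(b) = log(a × b); the function names `slide_rule_multiply` and `slide_rule_divide` are hypothetical, and a real slide rule would be limited to roughly three significant digits by how finely its scales can be read.

```python
import math


def slide_rule_multiply(a: float, b: float) -> float:
    """Multiply two positive numbers by adding their logarithms."""
    if a <= 0 or b <= 0:
        raise ValueError("logarithmic scales only cover positive numbers")
    # Sliding one scale along the other adds the lengths log10(a) and log10(b).
    combined_length = math.log10(a) + math.log10(b)
    # Reading the result off the scale converts the length back to a number.
    return 10 ** combined_length


def slide_rule_divide(a: float, b: float) -> float:
    """Divide two positive numbers by subtracting their logarithms."""
    if a <= 0 or b <= 0:
        raise ValueError("logarithmic scales only cover positive numbers")
    # Division slides the scale the other way, subtracting the lengths.
    return 10 ** (math.log10(a) - math.log10(b))


if __name__ == "__main__":
    print(slide_rule_multiply(2.0, 3.0))
    print(slide_rule_divide(9.0, 3.0))
```

The code computes with floating-point precision, so it hides the reading error that made slide-rule answers approximate in practice.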

Analog computers excelled in tasks requiring real-time simulation, where continuous data representation was more intuitive than discrete digital logic. However, their precision was limited by mechanical wear, electrical noise, and the difficulty of reconfiguring them for new tasks. Despite these constraints, their influence persists in modern hybrid systems, which blend analog’s responsiveness with digital programmability. These limitations soon opened the door to the digital revolution, in which binary logic and electronic switches redefined what machines could achieve.

Digital Revolution: The Rise of Binary Computing

The transition from analog to digital computing marked a fundamental shift in how machines processed information. While analog computers relied on continuous signals to model physical phenomena, digital computers took a different approach: computation on discrete symbols. This shift gathered pace in the 1940s with the electromechanical Harvard Mark I (1944), built from relays and switches, and the electronic ENIAC (1945), built from vacuum tubes. These early digital systems were bulky and power-hungry, and both still calculated in decimal, but they demonstrated the potential of discrete logic. Later designs settled on binary, where data is represented as zeros and ones.

The real breakthrough came with the invention of the transistor in 1947, which replaced vacuum tubes and enabled smaller, faster, and more reliable computers. By the 1960s, integrated circuits further miniaturized components, paving the way for mainframes like the IBM System/360. These machines standardized digital computing in business and science, offering unparalleled precision and programmability. Unlike analog systems, digital computers could execute complex algorithms, store vast amounts of data, and reproduce results with exact consistency.

The 1970s saw the rise of microprocessors, such as the Intel 4004, which brought digital computing to personal devices. This era cemented the dominance of binary logic, as software ecosystems expanded and digital systems became more accessible. The shift also transformed industries—enabling everything from real-time data processing to advanced simulations.

However, digital computing wasn’t without trade-offs. While it excelled in accuracy and scalability, it lacked the instantaneous responsiveness of analog systems in certain applications. This limitation would later inspire the development of hybrid computers, blending the best of both worlds. The digital revolution didn’t just replace analog technology—it redefined what computing could achieve.

Bridging Worlds: The Concept of Hybrid Computers

Hybrid computers represent a fascinating convergence of analog and digital technologies, designed to leverage the strengths of both paradigms. While digital computers excel in precision and programmability, analog systems offer unparalleled speed in solving complex differential equations and simulating real-world phenomena. Hybrid computers bridge this gap, integrating the rapid processing of analog components with the accuracy and control of digital systems.

At their core, hybrid computers consist of an analog subsystem for real-time data processing and a digital subsystem for numerical computation and logical operations. The two communicate via interface units, such as analog-to-digital converters (ADCs) and digital-to-analog converters (DACs), ensuring seamless data exchange. This architecture allows hybrid systems to tackle problems where speed and precision are equally critical, such as in aerospace simulations, medical diagnostics, and industrial process control.
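To make the role of the interface units concrete, here is a minimal sketch of an idealized ADC and DAC pair. The 5 V reference, the 8-bit resolution, and the function names `adc` and `dac` are assumptions chosen for illustration; real converters also contend with sampling rate, noise, and nonlinearity, none of which is modeled here.

```python
def adc(voltage: float, v_ref: float = 5.0, bits: int = 8) -> int:
    """Quantize a voltage in [0, v_ref] to the nearest unsigned integer code."""
    max_code = 2 ** bits - 1
    # Inputs outside the converter's range saturate at the end codes.
    clamped = min(max(voltage, 0.0), v_ref)
    # Adding 0.5 before truncating rounds to the nearest code.
    return int(clamped / v_ref * max_code + 0.5)


def dac(code: int, v_ref: float = 5.0, bits: int = 8) -> float:
    """Convert an integer code back to the voltage it represents."""
    max_code = 2 ** bits - 1
    code = min(max(code, 0), max_code)
    return code / max_code * v_ref


if __name__ == "__main__":
    original = 1.234
    code = adc(original)
    restored = dac(code)
    # The round trip loses at most half of one quantization step.
    print(code, restored, abs(restored - original))
```

The round-trip error is the price of crossing between the two domains: each extra bit of resolution halves the step size, which is why converter resolution is a key design choice in a hybrid system.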

One of the most compelling applications of hybrid computing is in real-time simulation. For instance, flight simulators use analog components to model aerodynamic forces with high responsiveness while relying on digital systems for precise instrument feedback and scenario programming. Similarly, in medicine, hybrid computers enable rapid analysis of physiological signals (like ECG waveforms) while maintaining the accuracy required for diagnostics.

Despite their niche applications, hybrid computers remain relevant in fields where pure digital or analog solutions fall short. Their ability to balance computational speed with exactitude makes them indispensable in specialized domains. As computing evolves, hybrid architectures may see renewed interest, particularly in areas like quantum computing and AI-driven simulations, where blending continuous and discrete processing could unlock new capabilities.

This chapter sets the stage for a deeper exploration of analog computers, which, despite their decline, continue to serve critical roles in specific industries—a topic we will examine next.

Analog Computers in Detail

Analog computers, though largely overshadowed by their digital counterparts, remain a fascinating and historically significant branch of computing. Unlike digital systems that process discrete binary data, analog computers operate using continuous physical quantities such as voltage, current, or mechanical motion to represent variables. This allows them to model real-world systems in real time, making them exceptionally suited for simulating dynamic processes.

The core components of an analog computer include operational amplifiers (op-amps), potentiometers, and integrators, which work together to solve differential equations. By configuring these components in specific ways, engineers can replicate complex systems—such as aerodynamic forces on an aircraft or electrical circuit behaviors—without the need for digital discretization. This real-time processing capability made analog computers indispensable in mid-20th-century aviation, where they were used for flight simulation and autopilot systems.
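The way integrators are chained to solve a differential equation can be mimicked in software. The sketch below models a mass-spring-damper system, m·x'' + c·x' + k·x = 0, as a summing stage feeding two integrators in series, which is the classic analog patching arrangement. The function name, parameter names, and default values are assumptions for illustration, and the loop uses small discrete time steps, so it only approximates what the analog circuit does continuously.

```python
import math


def simulate_mass_spring(duration: float, dt: float = 1e-3, mass: float = 1.0,
                         damping: float = 0.0, stiffness: float = 1.0,
                         x0: float = 1.0, v0: float = 0.0) -> tuple:
    """Return (position, velocity) after `duration` seconds."""
    x, v = x0, v0
    steps = round(duration / dt)
    for _ in range(steps):
        # Summing amplifier: combine the fed-back signals into the acceleration.
        acceleration = -(damping * v + stiffness * x) / mass
        # First integrator: acceleration becomes velocity.
        v += acceleration * dt
        # Second integrator: velocity becomes position.
        x += v * dt
    return x, v


if __name__ == "__main__":
    # Undamped case: after half a period (pi seconds) the mass should sit
    # close to the opposite extreme of its swing.
    print(simulate_mass_spring(math.pi))
```

In the analog machine the potentiometers would set the coefficients c/m and k/m, and changing the problem means rewiring the patch panel rather than editing a parameter.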

Despite their decline in general computing, analog computers still find niche applications. In education, they serve as powerful tools for teaching control theory and differential equations, offering students a tangible way to visualize mathematical concepts. Additionally, certain modern hybrid systems—as discussed in the previous chapter—leverage analog components for high-speed signal processing while relying on digital systems for precision and programmability.

While digital computers dominate today’s landscape due to their versatility and scalability, analog systems remind us that not all problems require binary logic. Their legacy persists in specialized domains where continuous modeling provides unmatched efficiency, bridging the gap between theoretical mathematics and real-world phenomena. The next chapter will explore how digital architectures have capitalized on precision and programmability to redefine computing.

Digital Computers Unpacked

Digital computers, unlike their analog counterparts, process information using discrete binary values—0s and 1s—making them highly precise and versatile. At their core, digital computers rely on a structured architecture comprising the Central Processing Unit (CPU), memory, and peripheral devices. The CPU acts as the brain, executing instructions fetched from memory, performing arithmetic and logic operations, and managing data flow. Modern CPUs integrate multiple cores, enabling parallel processing for enhanced performance.

Memory in digital computers is hierarchical, ranging from fast but volatile RAM (Random Access Memory) to slower but persistent storage devices like SSDs and HDDs. Cache memory, embedded within the CPU, further accelerates data access by storing frequently used instructions. Peripheral devices—keyboards, monitors, and printers—facilitate interaction between users and the machine, while buses and interfaces like USB and PCIe ensure seamless communication between components.

The dominance of digital computers stems from their programmability, scalability, and reliability. Unlike analog systems, which excel in continuous data processing, digital computers handle discrete tasks with unmatched accuracy. This makes them ideal for general-purpose computing, from running operating systems to executing complex algorithms in fields like artificial intelligence and big data analytics. Their binary foundation also simplifies error detection and correction, ensuring robust performance in critical applications.
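A single even-parity bit is one of the simplest illustrations of why binary data lends itself to error detection. This is a minimal sketch with hypothetical function names; it can detect any single flipped bit but cannot locate or correct it, which is the job of stronger codes such as Hamming or CRC schemes.

```python
def add_parity_bit(bits: list) -> list:
    """Append a bit so that the total number of 1s in the word is even."""
    parity = sum(bits) % 2
    return bits + [parity]


def has_even_parity(word: list) -> bool:
    """Return True if the word, including its parity bit, has an even number of 1s."""
    return sum(word) % 2 == 0


if __name__ == "__main__":
    word = add_parity_bit([1, 0, 1, 1])
    print(word, has_even_parity(word))

    # Simulate a transmission error by flipping one bit.
    corrupted = word.copy()
    corrupted[2] ^= 1
    print(corrupted, has_even_parity(corrupted))
```

An analog signal has no equivalent check: a slightly wrong voltage is still a valid voltage, so noise cannot be told apart from data.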

Before turning to hybrid systems, it is worth noting that digital computers remain the backbone of modern technology. Their architecture continues to evolve, while research into quantum computing and neuromorphic chips explores alternatives beyond the conventional design. For conventional machines, the core principles of binary logic and modular design remain unchanged, cementing their role as the workhorses of the digital age.

Hybrid Systems: How They Work

Hybrid computers represent a sophisticated fusion of analog and digital technologies, designed to leverage the strengths of both systems. At their core, they combine the continuous signal processing capabilities of analog computers with the precision and programmability of digital systems. This integration allows hybrid computers to excel at complex mathematical problems, particularly differential equations, which purely digital systems can only approximate through many small iterative steps.

The mechanics of hybrid computers involve real-time analog processing for dynamic equations, while digital components handle discrete logic and control functions. For example, in solving a differential equation, the analog portion rapidly computes continuous variables using operational amplifiers and integrators, while the digital unit manages data storage, user interfaces, and iterative refinements. This division of labor drastically reduces computation time compared to purely digital methods, which rely on step-by-step numerical approximations.
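The cost of step-by-step numerical approximation on the digital side can be seen with the simplest possible case. The sketch below applies the forward Euler method to dx/dt = -x with x(0) = 1, whose exact solution is e^(-t). The equation and the function name `euler_decay` are chosen purely for illustration and are not taken from any particular hybrid machine.

```python
import math


def euler_decay(t_end: float, steps: int) -> float:
    """Approximate x(t_end) for dx/dt = -x, x(0) = 1, using forward Euler."""
    dt = t_end / steps
    x = 1.0
    for _ in range(steps):
        # Each step advances the solution along the current slope, -x.
        x += -x * dt
    return x


if __name__ == "__main__":
    exact = math.exp(-1.0)
    for steps in (10, 100, 1000):
        error = abs(euler_decay(1.0, steps) - exact)
        # More steps shrink the error but cost proportionally more work.
        print(steps, error)
```

For this method the error falls roughly in proportion to the step size, so each extra digit of accuracy costs about ten times as many steps. An analog integrator produces the whole curve continuously instead, with accuracy bounded by component tolerances and noise rather than by step count.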

Key to their efficiency is the hybrid interface, which converts signals between analog and digital domains. Analog-to-digital converters (ADCs) and digital-to-analog converters (DACs) ensure seamless communication between the two subsystems. This enables applications like real-time simulation, where analog circuits model physical systems (e.g., aircraft dynamics), and digital components process control algorithms or display results.

Hybrid computers thrive in specialized fields such as scientific research, aerospace, and medical diagnostics, where speed and accuracy are critical. Their ability to handle nonlinear systems and rapidly changing variables makes them indispensable in scenarios where purely digital or analog systems fall short. As computing demands grow more complex, hybrid architectures continue to evolve, bridging the gap between real-world phenomena and digital precision.

Comparative Analysis: Analog vs. Digital vs. Hybrid

Analog, digital, and hybrid computers each have distinct strengths and weaknesses, making them suitable for different applications. Analog computers excel in real-time processing and solving differential equations, thanks to their continuous signal processing. They are highly efficient for simulations like weather modeling or fluid dynamics, where precision isn’t as critical as speed. However, their lack of programmability and susceptibility to noise limit their versatility in modern computing.

Digital computers, on the other hand, dominate in accuracy, storage, and programmability. Their discrete binary logic makes calculations exactly reproducible, within the limits of the chosen numeric precision, which suits tasks like data analysis, cryptography, and general-purpose computing. Yet their step-by-step processing can be slower than analog systems for real-time simulations.

Hybrid computers bridge these gaps by combining analog speed with digital precision. They are particularly effective in medical imaging, aerospace engineering, and robotics, where real-time analog processing and digital control must coexist. For instance, a hybrid system can condition and process sensor signals in the analog domain with minimal delay while its digital side runs complex control algorithms, a combination that is harder to achieve with a purely analog or purely digital design.

The choice between these systems depends on the task. Analog is best for dynamic, real-time problems, digital for structured, high-precision tasks, and hybrid for scenarios demanding both. As computing evolves, hybrid systems are becoming more prominent, leveraging advancements in both domains to tackle increasingly complex challenges, as discussed in the previous chapter. The next chapter will explore how these technologies developed historically, setting the stage for today’s sophisticated systems.

Historical Milestones in Computing Technology

The journey of computing technology spans centuries, evolving from rudimentary analog devices to today’s sophisticated hybrid systems. Early analog computers, like the Antikythera mechanism (circa 100 BCE), demonstrated mechanical ingenuity for astronomical calculations. In the 19th century, Charles Babbage’s Difference Engine showed that discrete, digit-based calculation could be mechanized, and his later Analytical Engine design laid groundwork for programmable computation, although neither was an analog machine. The 20th century marked a turning point with Vannevar Bush’s Differential Analyzer (1931), which solved complex differential equations and stands as an analog marvel later overshadowed by the rise of digital machines.

The digital revolution began with Konrad Zuse’s Z3 (1941), widely regarded as the first working programmable digital computer, followed by ENIAC (1945), which brought general-purpose electronic digital processing. These machines replaced continuous signals with discrete digits (binary in the Z3, decimal in ENIAC), enabling precision and scalability. From the late 1950s, transistors replaced vacuum tubes, accelerating digital dominance. However, analog persisted in niche applications like control systems, where real-time signal processing was critical.

Hybrid computing emerged in the 1960s, blending analog’s speed with digital’s accuracy. The HYCOMP 250, released by Packard Bell in 1961 as an early desktop hybrid system, exemplified this by combining analog components for dynamic simulations with digital units for data storage. Aerospace and defense sectors leveraged hybrids for missile trajectory calculations, where analog handled rapid feedback and digital ensured iterative refinement.

Recent advancements integrate analog principles into digital frameworks, like neuromorphic chips mimicking neural networks. Quantum computing, though typically programmed with discrete gate operations, relies on qubit states that vary continuously, which gives it an analog-like character. This convergence hints at a future where hybrid systems play a larger role in complex, real-time applications, bridging historical divides. The evolution reflects not just technological progress but a continuous quest to balance speed, precision, and adaptability, a theme that will shape future innovations.

Modern Applications and Future Prospects

Analog, digital, and hybrid computers each play distinct yet complementary roles in modern industries, leveraging their unique strengths to solve complex problems. Analog computers, though less common today, excel in real-time simulations and control systems. Aerospace industries use them for flight simulations and autopilot systems, where continuous data processing is critical. In healthcare, analog components are embedded in medical devices like ECG monitors, converting physiological signals into actionable data with minimal latency.

Digital computers dominate data-driven fields due to their precision and scalability. In research, they power large-scale simulations, from climate modeling to particle physics, handling vast datasets with exactness. Healthcare relies on digital systems for diagnostics, imaging, and genomic analysis, where accuracy is non-negotiable. The rise of AI and machine learning further underscores their importance, as training models demands immense computational power and storage.

Hybrid computers bridge the gap, merging analog speed with digital precision. They are useful in real-time analytics, such as monitoring industrial processes or financial markets, where rapid analog processing feeds into digital decision-making frameworks. In medicine, imaging techniques like MRI and PET scans follow a similar pattern, pairing analog signal acquisition with digital reconstruction.

Looking ahead, the convergence of these technologies promises groundbreaking advancements. Analog computing could revive in neuromorphic systems, mimicking neural networks for energy-efficient AI. Digital quantum hybrids may unlock unprecedented processing power, while hybrid architectures could optimize edge computing, bringing intelligence closer to data sources. As industries push boundaries, the synergy of analog, digital, and hybrid systems will redefine what’s possible in computing.

The Future of Computing: Blending the Best of All Worlds

The future of computing lies in the seamless integration of analog, digital, and hybrid technologies, unlocking unprecedented potential across AI, quantum computing, and beyond. While digital computers dominate today’s landscape due to their precision and scalability, analog systems excel in real-time processing and energy efficiency. Hybrid architectures, which combine the strengths of both, are emerging as a powerful solution for specialized applications where neither analog nor digital alone suffices.

One of the most promising frontiers is neuromorphic computing, where analog components mimic the brain’s neural networks, enabling ultra-efficient AI processing. Digital systems then refine these outputs for accuracy, creating a symbiotic relationship. Similarly, quantum computing—which inherently relies on analog-like qubits—could benefit from hybrid interfaces to bridge the gap between quantum and classical systems, enhancing error correction and scalability.

In AI, analog computing could revolutionize edge devices by reducing power consumption while maintaining high-speed data processing. Digital frameworks would ensure robustness, enabling complex decision-making. Hybrid systems, meanwhile, might accelerate breakthroughs in real-time AI, such as autonomous vehicles or medical diagnostics, where latency and precision are critical.

Beyond AI, hybrid models could redefine scientific simulations, blending analog’s continuous modeling with digital’s discrete accuracy. Climate modeling, fluid dynamics, and material science would gain from this fusion, offering faster, more nuanced insights.

The convergence of these technologies isn’t just incremental—it’s transformative. By leveraging analog’s parallelism, digital’s reliability, and hybrid’s adaptability, we stand on the brink of a computing renaissance. The challenge lies in refining interoperability, but once mastered, this triad could redefine what’s computationally possible, pushing boundaries from quantum realms to everyday AI applications.

Conclusions

As we’ve explored, analog, digital, and hybrid computers each play pivotal roles in computing’s evolution. From analog’s speed in real-time simulations to digital’s precision and versatility and hybrid’s balanced approach, understanding these technologies illuminates the path forward in innovation. The future of computing lies in leveraging these models’ strengths to solve increasingly complex problems.